Three years into the generative AI boom, the question is no longer whether to adopt AI, but why so few initiatives make it beyond pilots.

This article explores why most AI products fail to scale, and what the small group of high performers does differently.

As early adopters who worked hands‑on with AI long before it became an enterprise buzzword, we have seen this gap up close. While helping large organisations experiment with and deploy AI across real products and workflows, we watched the same challenges surface again and again.

What makes this moment particularly telling is that McKinsey’s 2025 global survey now confirms the same pattern at scale. What we observed project by project is now visible across industries, regions, and enterprise sizes.

Context: AI Is Everywhere — Value Is Not

Nearly every enterprise today uses AI in some form — from copilots and chatbots to recommendation engines and internal automation. On the surface, this looks like progress.

But beneath that surface, a different reality emerges. Despite impressive demos and promising pilots, AI often stalls before it becomes a real driver of growth. The gap between experimentation and enterprise impact remains wide — and it isn’t accidental.

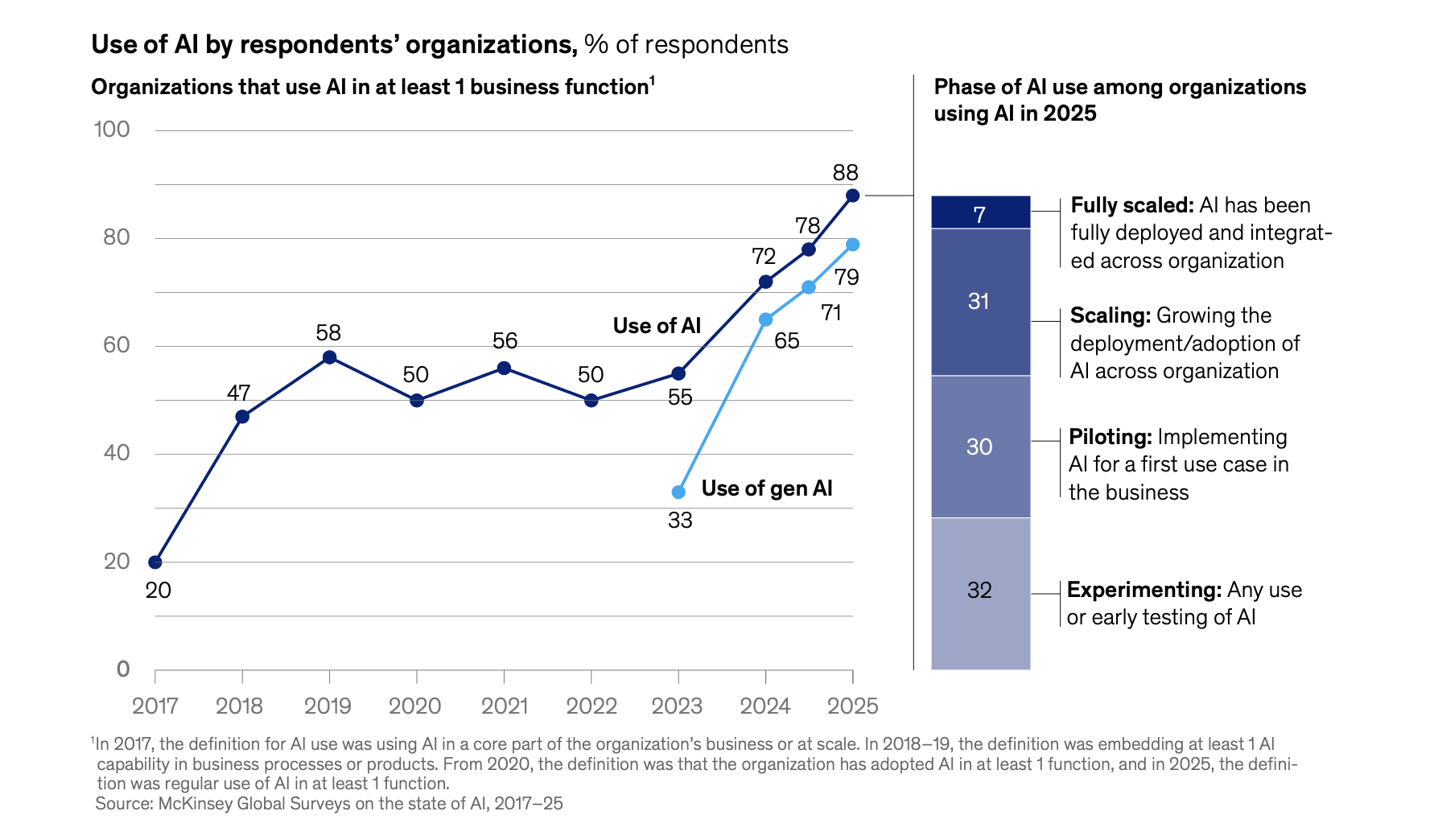

Reported use of AI in at least one business function continues to increase. Source: McKinsey & Company

Based on McKinsey’s 2025 global AI research and our hands‑on experience designing AI‑native products for enterprises, a consistent pattern emerges: companies that scale AI successfully treat it as a product and organisational transformation — not a tool rollout.

The AI Scaling Illusion

AI adoption today is widespread. Nearly every large organisation uses AI in at least one business function, and many use it across several — marketing, IT, product development, operations. Yet, maturity lags far behind adoption.

McKinsey’s 2025 research shows that close to two‑thirds of organisations remain stuck in experimentation or piloting, while only a minority have managed to scale AI across the enterprise. Even fewer report meaningful financial impact.

Larger companies lead the way in scaling AI beyond pilots. Source: McKinsey & Company

In other words, AI has become common. Scalable AI has not. This disconnect creates what we call the AI scaling illusion: organisations appear advanced on the surface, while underlying workflows, decision models, and ownership structures remain unchanged.

Only 39% of respondents attribute any EBIT impact to AI, and in most cases that impact is still below 5% of EBIT. The conclusion is clear: using AI is no longer a differentiator. Scaling it is.

What Actually Separates AI High Performers

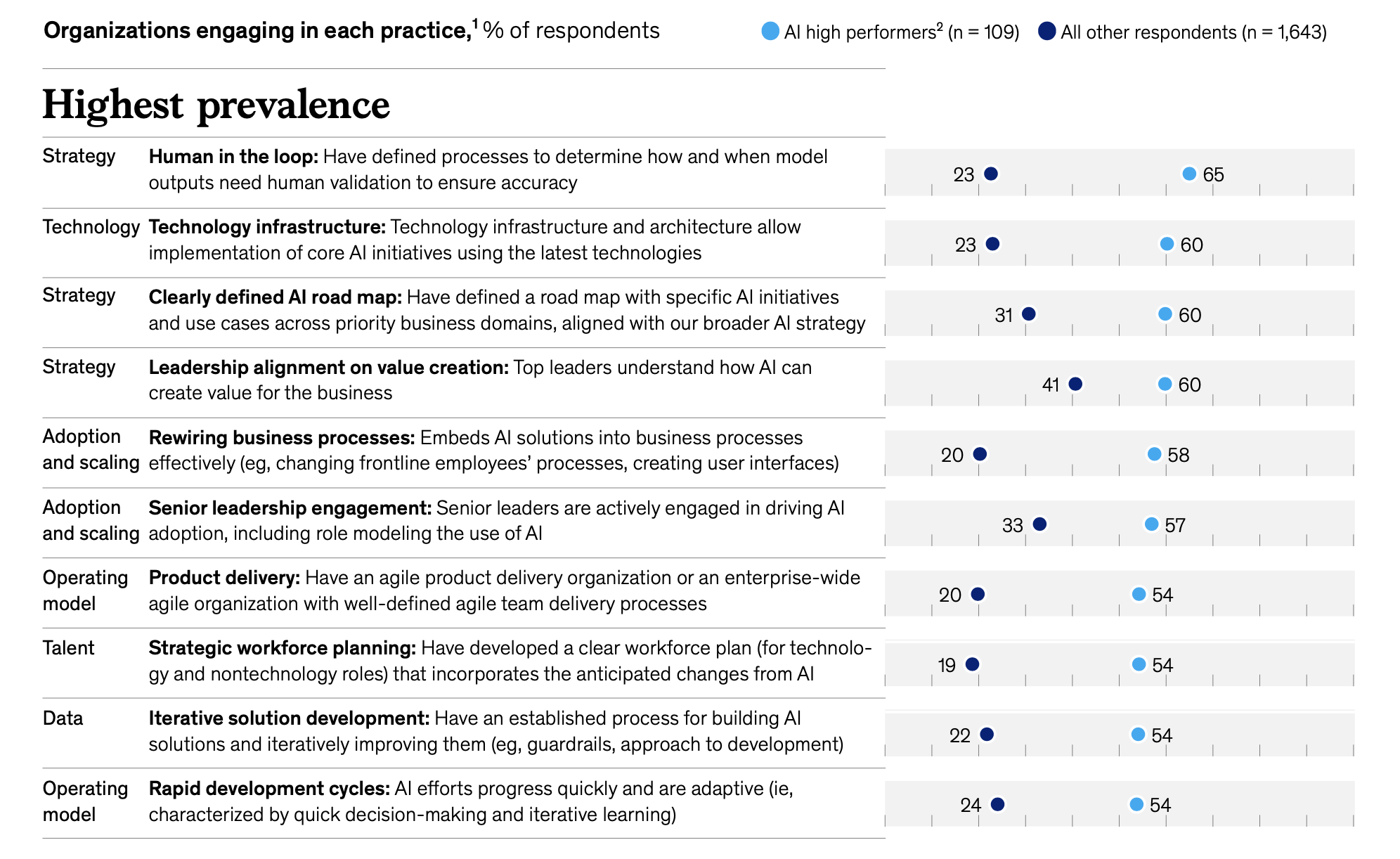

McKinsey identifies a small but telling group — roughly 6% of organisations — that report both significant value from AI and a measurable EBIT contribution. These AI high performers offer clear signals of what actually works.

They are not distinguished by better models or higher AI spend alone. They stand out because AI is deeply embedded into their operating model, product strategy, and decision‑making structures.

They design for growth — not just efficiency

Most AI initiatives start with cost reduction: automating repetitive work, speeding up internal processes, and trimming operational overhead. These gains are real — but limited.

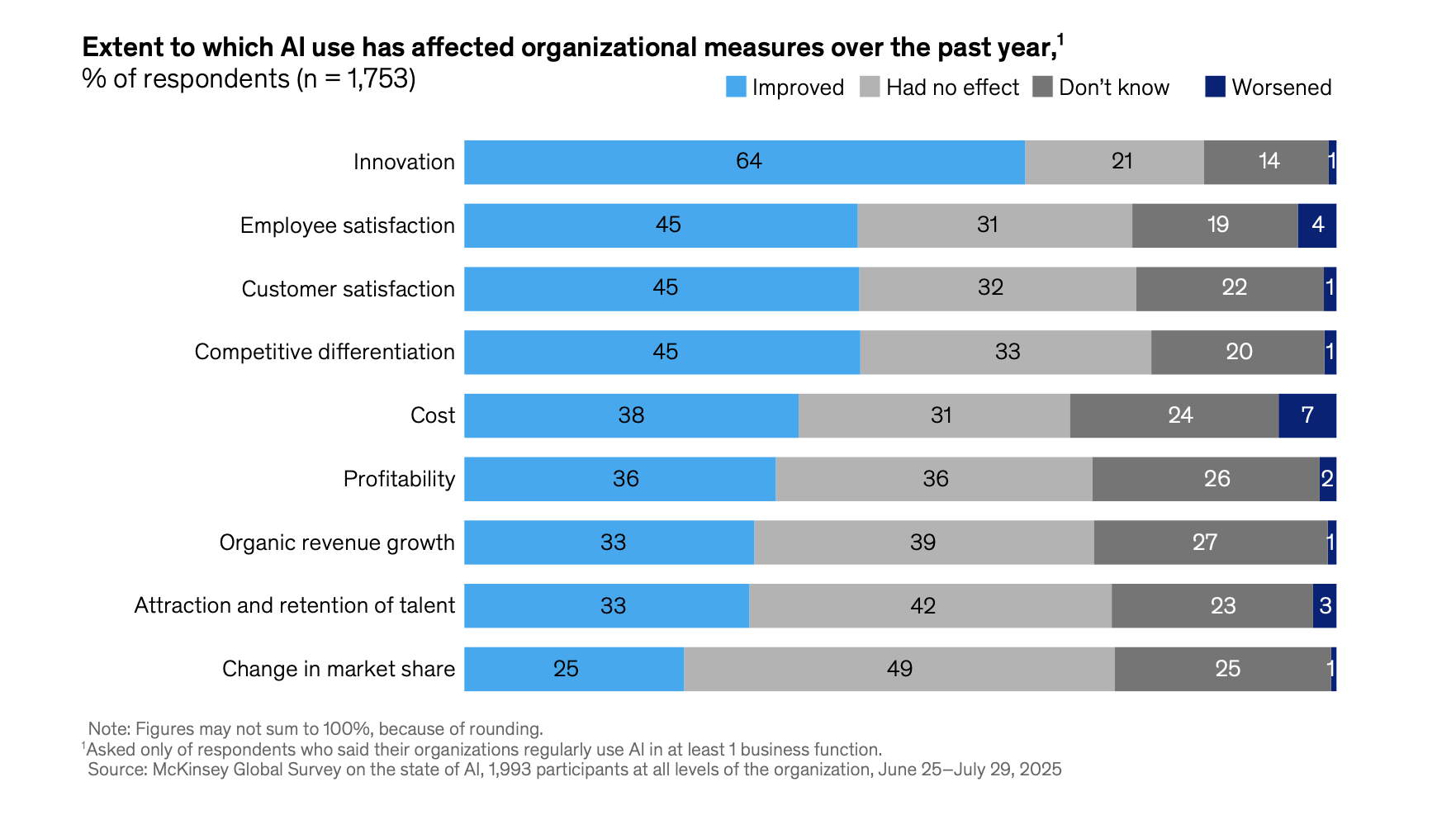

Respondents most often cite benefits from AI in innovation, employee and customer satisfaction, and competitive differentiation. Source: McKinsey & Company

High performers go further. They use AI to:

- unlock new product capabilities,

- personalise user experiences at scale,

- and create faster feedback loops between users, data, and decisions.

AI becomes a value‑creation layer, not just a back‑office optimisation tool.

They redesign workflows — not just add AI on top

One of the strongest predictors of AI success is fundamental workflow redesign. McKinsey’s data shows that AI high performers are nearly three times more likely than others to rethink how work actually happens when AI is introduced.

This matters because AI does not behave like traditional software. It changes where decisions are made, how often they are made, and who is involved in them. When AI is layered onto existing processes without redesign, complexity increases — and adoption suffers.

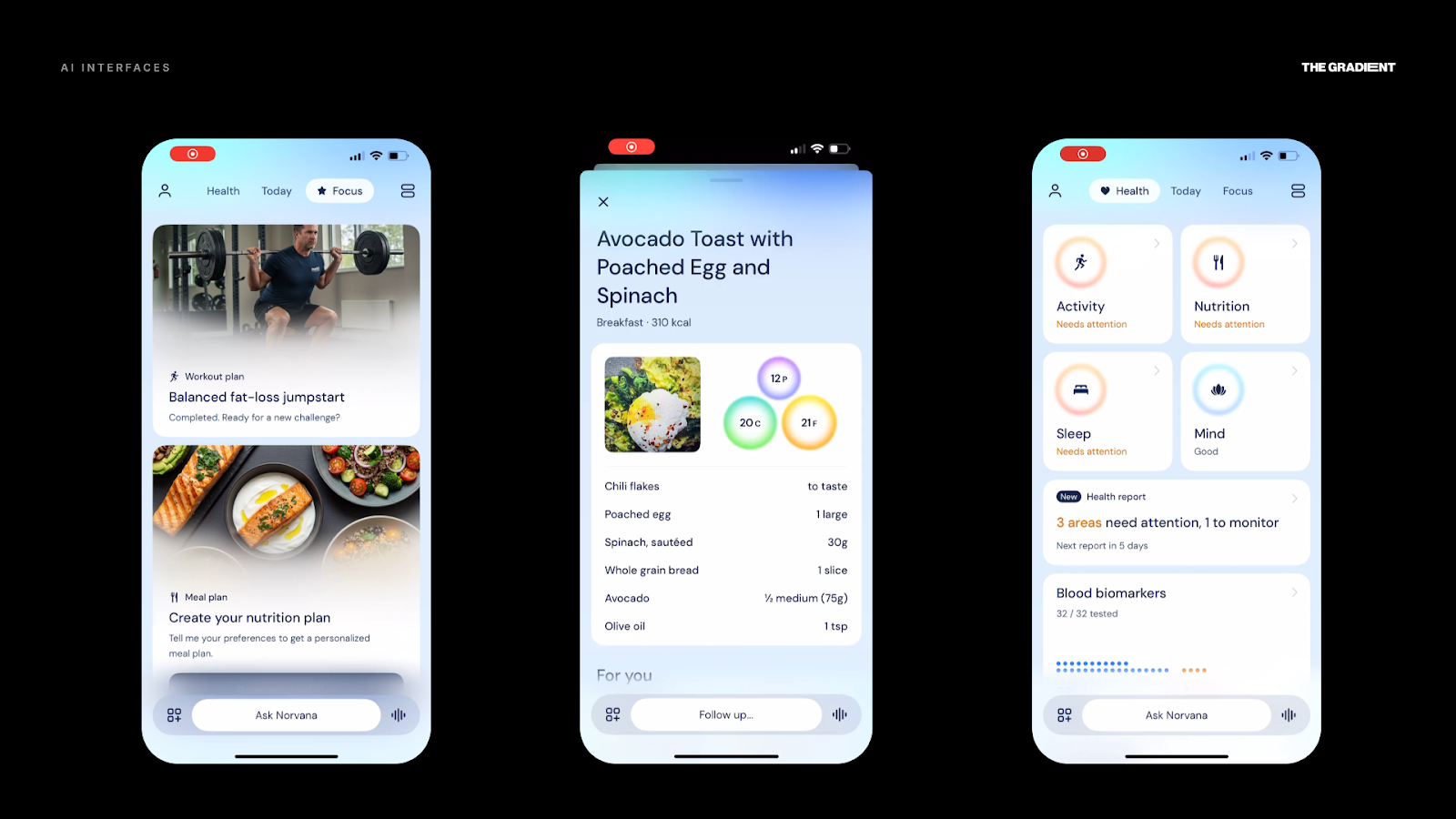

When working on Norvana, an AI‑powered personal health companion, we encountered a familiar temptation: use AI to generate more insights, track more signals, and surface more data. On paper, it looked powerful. In reality, it risked overwhelming users.

User research told a different story. People didn’t want another dashboard. They wanted clarity, reassurance, and guidance at the moments that mattered.

Instead of expanding feature sets, we redesigned the workflow itself:

- shifting from constant tracking to context‑aware nudges,

- turning AI from an analyst into a behavioural coach,

- and designing conversations instead of data‑heavy screens.

Norvana, AI-powered personal health companion interface with personalised workouts, meals, and health insights

By reducing friction rather than increasing intelligence, Norvana’s AI became easier to trust — and easier to adopt. The result wasn’t “more AI,” but better integration into daily life, aligned with how people actually behave.

They treat AI as a product — not a side initiative

AI that scales is almost always owned by product teams, not just IT or data departments.

High performers apply classic product disciplines to AI:

- clear ownership and roadmaps,

- iterative delivery and validation,

- success metrics tied to user and business outcomes,

- and close collaboration between design, engineering, and domain experts.

In practice, scaling AI looks far closer to building a platform than deploying a tool.

What Blocks AI Scaling

AI initiatives fail to scale when intelligence is added without redesigning how work actually happens.

Across industries, the barriers to scaling AI are remarkably consistent — and largely non‑technical. McKinsey’s findings, combined with our delivery experience, point to five recurring blockers:

- Pilot‑first thinking: AI initiatives remain trapped in proofs of concept with no clear path to productisation.

- Lack of workflow ownership: AI is introduced without redefining who owns decisions, exceptions, and outcomes.

- Weak product governance: No clear AI roadmap, success metrics, or prioritisation model tied to business value.

- Trust and explainability gaps: Users are expected to rely on AI outputs they don’t fully understand.

- Missing human‑in‑the‑loop design: Validation and escalation mechanisms are absent — especially in high‑stakes domains like finance and healthcare.

These blockers explain why AI often performs well in demos but breaks down under real operational complexity.

Organizations seeing the largest returns from AI are more likely than others to follow a range of best practices. Source: McKinsey & Company

The Missing Layer: Product & UX Thinking for AI

McKinsey’s research repeatedly points to the same conclusion: technology readiness alone does not create value. AI systems succeed or fail at the point where humans interact with them. This is where product and UX design become critical.

Designing AI means designing:

- decision surfaces, not just outputs,

- clear boundaries and feedback loops for agentic systems,

- trust through transparency and recoverability — not accuracy alone.

In regulated industries like fintech and healthcare, AI must support human judgment, not obscure it. Without this layer, AI remains impressive — but brittle.
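To make "support human judgment, not obscure it" concrete, here is a minimal Python sketch of one possible escalation rule. It is an illustration under stated assumptions, not a description of any system mentioned in this article: the names `ModelOutput`, `Decision`, and `route`, and the 0.9 confidence threshold, are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelOutput:
    label: str                 # e.g. "approve" or "decline" (hypothetical labels)
    confidence: float          # model's self-reported confidence in [0, 1]
    reasons: List[str] = field(default_factory=list)  # human-readable reasons


@dataclass
class Decision:
    outcome: str       # the model's label, or "needs_review"
    decided_by: str    # "model" or "human_queue"
    explanation: str   # always carried along, so a reviewer can see the reasons


def route(output: ModelOutput, high_stakes: bool, threshold: float = 0.9) -> Decision:
    """Send high-stakes or low-confidence cases to a human reviewer.

    The threshold is an assumed value; in practice it would be set per
    domain together with the people who own the decision.
    """
    explanation = "; ".join(output.reasons) or "no reasons supplied"
    if high_stakes or output.confidence < threshold:
        return Decision("needs_review", "human_queue", explanation)
    return Decision(output.label, "model", explanation)


if __name__ == "__main__":
    routine = route(ModelOutput("approve", 0.97, ["low amount"]), high_stakes=False)
    unsure = route(ModelOutput("approve", 0.60), high_stakes=False)
    print(routine.decided_by, unsure.decided_by)
```

The point of the sketch is the shape, not the numbers: every decision records who made it and why, and the path to a human is part of the design rather than an afterthought.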

From Experiments to Scaled Impact: What Actually Works

When enterprises do manage to scale AI, a few patterns consistently show up.

- Fintech: AI is embedded into core decision flows (risk, fraud, credit), with clear human escalation and explainability.

- Retail & e‑commerce: AI recommendations are integrated into the full customer journey, improving conversion, retention, and inventory efficiency.

- Healthcare: AI supports triage, prioritisation, and behaviour change rather than replacing clinical judgment.

In another of our projects, Lumiere, an AI‑powered video intelligence platform, the challenge was not model capability but adoption at scale. AI insights existed, but they lived in silos. Analysts, creatives, and commercial teams each saw fragments of value without a shared decision flow.

We shifted the focus from automation to orchestration:

- aligning AI outputs to concrete decision moments,

- tailoring insights to different roles,

- embedding AI directly into existing creative and operational workflows.

The impact was tangible:

- faster insight‑to‑decision cycles,

- broader internal adoption of AI‑powered tools,

- and greater use of AI insights in client‑facing decisions.

By reducing cognitive load and clarifying ownership, AI became a shared organisational capability — and one that scaled.

Scaling AI Is a Product Challenge

As AI becomes embedded in real‑time systems — from agentic workflows to emerging AI‑powered wearables and smart glasses — the cost of poor design increases.

Scaling AI is not about doing more pilots. It is about rethinking how work happens, how decisions are made, and how humans and AI collaborate inside real products and organisations.

The organisations that succeed won’t be the ones with the most advanced models. They will be the ones that design AI to work — at scale, with people, and in the real world.