Everything you need to know about OpenAI’s Advanced Voice Mode: how it works, key features, and use cases

Intro

Voice AI is one of the most promising areas of AI development in recent years. It combines elements of conversational AI and natural language processing (NLP) and extends the capabilities of text-based AI models.

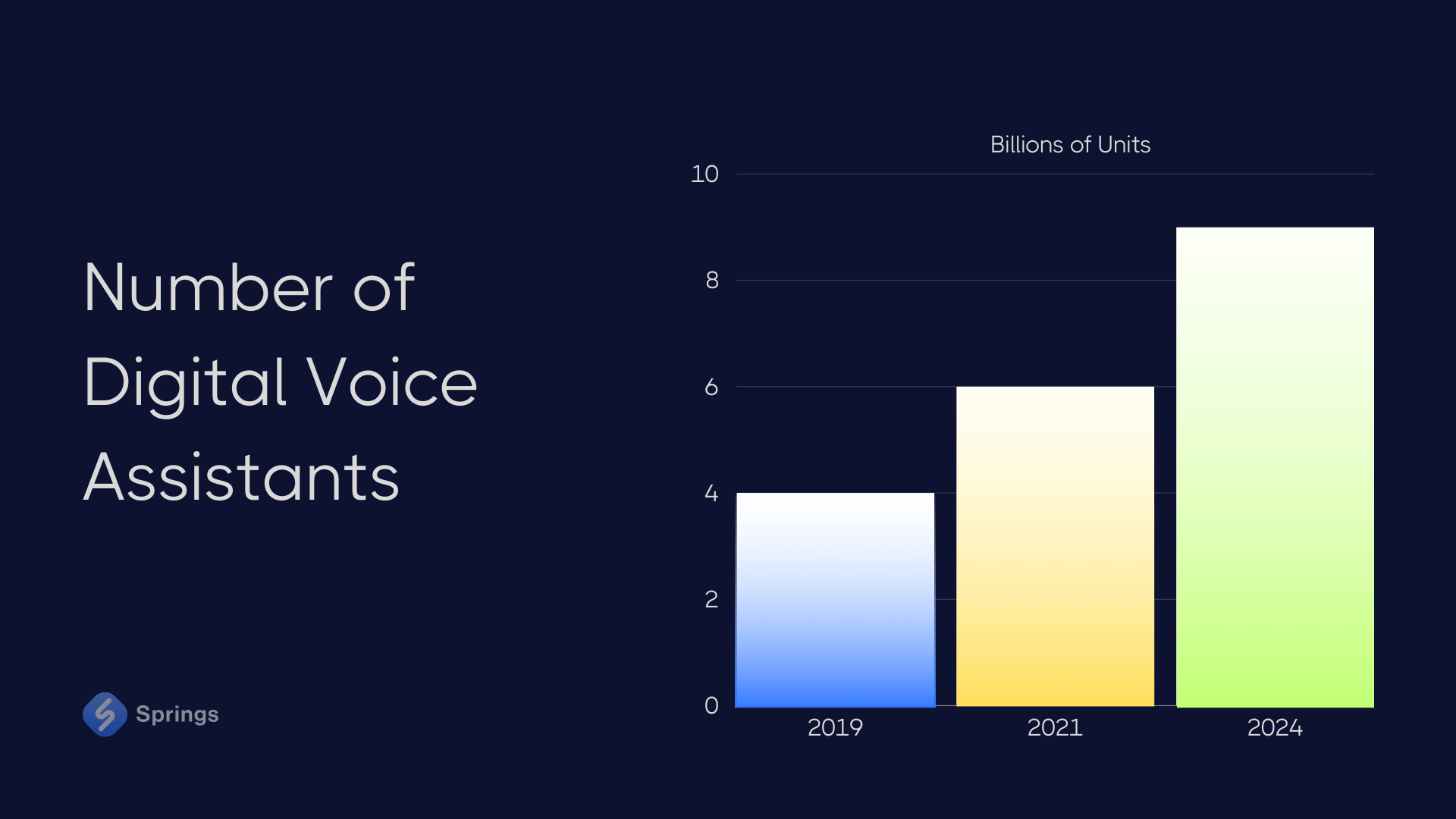

According to Statista, the number of digital voice assistants is expected to reach more than 8.4 billion units by 2024, signaling a transformative shift in how businesses can use AI daily. With advancements like GPT-4 Audio and OpenAI Audio, organizations have access to a new realm of possibilities.

Whether you’re integrating voice AI into your customer support helpdesk, website, or mobile app, or using it as any other AI Agent for business, these technologies offer endless opportunities. Additionally, using tools like GPT-4 Audio and OpenAI Audio can save your business significant time and resources, all while delivering a superior customer experience.

With the recent release of OpenAI’s Advanced Voice Mode, more and more business owners have started thinking about implementing it in their companies. So, let’s look at how OpenAI’s voice mode works and how it can be used.

What is Advanced Voice Mode?

ChatGPT Voice Mode is a ChatGPT feature that lets you interact with the underlying large language model (LLM) by voice. Simply put, you can now talk with ChatGPT using the microphone of your mobile phone, laptop, or PC.

Advanced Voice Mode is powered by OpenAI’s latest voice technology and is designed to improve interactions through natural, conversational speech. It allows users to communicate with AI agents more intuitively, making the experience feel seamless and engaging, whether for customer support AI chatbots, virtual assistants, or other voice-driven applications.

Under The Hood

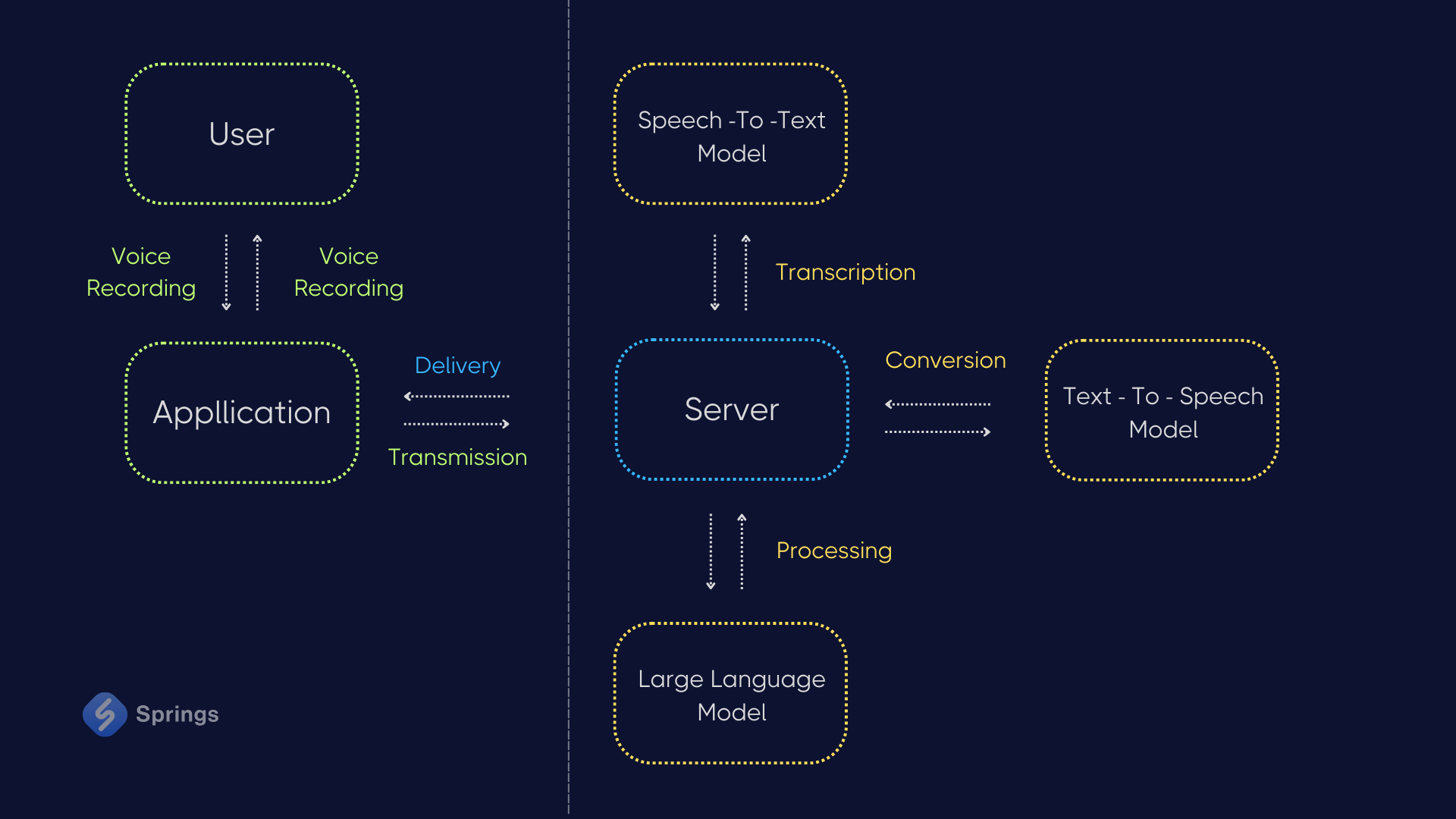

Before moving to the specifics and limitations of GPT-4 Audio, let’s pause for a moment to see how OpenAI Audio works under the hood, and why this is relevant for your business. Typically, voice apps follow a structured process:

- Recording - the app records your voice and detects when you stop speaking.

- Transmission - the recording is then sent to a server.

- Transcription - a speech-to-text model transcribes the audio into text.

- Processing - the text is processed through a large language model to generate a response.

- Conversion - the response is converted back into audio using a text-to-speech model.

- Delivery - the voice recording is sent or streamed back to the app for playback.

Although streaming can reduce some latency, this process involves three different models:

- Speech-to-Text Model

- Large Language Model (LLM)

- Text-to-Speech Model

Please have a look at the schema below to better understand the whole workflow:

As a result, there is often a small delay between when you finish speaking and when you hear a response. This lag can affect real-time interactions, but it is not critical, because in many business areas, such as customer support or sales calls, people often wait some time before getting a response anyway.
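The chain of three models described above can be sketched in a few lines of Python. This is only an illustration of why the delays add up: the three `fake_*` functions are hypothetical stand-ins invented for this example, not OpenAI APIs, and a real app would call actual speech-to-text, LLM, and text-to-speech services in their place.

```python
import time
from dataclasses import dataclass


@dataclass
class StageTiming:
    name: str
    seconds: float


def run_voice_pipeline(audio, speech_to_text, language_model, text_to_speech):
    """Run the three models one after another and record how long each takes."""
    timings = []

    def timed(name, stage, payload):
        start = time.perf_counter()
        result = stage(payload)
        timings.append(StageTiming(name, time.perf_counter() - start))
        return result

    transcript = timed("speech-to-text", speech_to_text, audio)
    reply_text = timed("language model", language_model, transcript)
    reply_audio = timed("text-to-speech", text_to_speech, reply_text)
    return reply_audio, timings


# Hypothetical stand-ins for the real models (assumptions for this sketch).
def fake_speech_to_text(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_language_model(prompt: str) -> str:
    return f"You said: {prompt}"


def fake_text_to_speech(text: str) -> bytes:
    return text.encode("utf-8")


reply_audio, timings = run_voice_pipeline(
    b"hello", fake_speech_to_text, fake_language_model, fake_text_to_speech
)

# The delay the user perceives is roughly the sum of all three stages,
# plus network transmission time, which this sketch does not model.
total_delay = sum(t.seconds for t in timings)
```

Because each stage has to wait for the previous one, speeding up a single model only helps so much; the total delay is bounded by the slowest steps in the chain.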

Let’s have a look at the other limitations we may expect.

The Limitations of ChatGPT Voice Mode

ChatGPT's Advanced Voice Mode offers significant potential for businesses, but some areas need attention before fully integrating it into your operations. Understanding these limitations can help you make informed decisions and be ready for the challenges.

- Conversational Etiquette. While the AI is designed to be helpful, it can sometimes interrupt, which may disrupt the flow of customer interactions. It also struggles with multi-person conversations, making it less effective in group settings where seamless communication is essential.

- Time Awareness. The current model cannot manage time-based tasks, such as setting timers or tracking durations. This could limit its usefulness in scenarios where precise time management is critical, like in project coordination or customer support tasks.

- Access to Information. Advanced Voice Mode does not yet have access to files, custom instructions, or memory, unlike standard ChatGPT with GPT-4o. This limits its ability to engage deeply with specific content or maintain context, which could affect its performance in handling complex customer queries or ongoing business discussions.

- Voice Imitation Concerns. It is worth mentioning that there have been instances where the AI unintentionally mimicked users' voices during testing. This raises potential security concerns and highlights the need for robust safeguards to prevent unauthorized voice cloning.

While these limitations exist, understanding them helps you plan the future integration of ChatGPT into your business and stay aware of the risks and issues you may need to solve along the way.

In any case, you can always start with the Discovery Phase and POC/MVP development or integration. There is no need to build a spaceship from scratch. Instead, you can start with a bicycle that will one day become a spaceship.

Don’t hesitate to contact us and dive deeper into the Discovery Phase and MVP opportunities. We would be happy to start a new journey with you together.

Key Features of Advanced Voice Mode

Before exploring the main features of OpenAI’s Advanced Voice Mode, it’s essential to understand two key concepts that could influence business communications: Voice Activity Detection and Emotion Tags. These innovations can improve how AI interprets and responds to voice interactions, making the technology more intuitive and effective for your business needs.

Voice Activity Detection

As soon as you activate voice mode and start speaking, the app starts recording your voice. Once you stop, it sends the recording to the ChatGPT APIs. This process of detecting when you start and stop speaking is called Voice Activity Detection, or VAD.

In other words, this is a program that usually runs on your device and determines when to start and stop recording your voice. When it detects a stop or long enough pause, it packages up the recording and uploads it to the server. VAD helps reduce bandwidth by only sending sound samples that are likely to have voice input to the server and helps mark the end of the input so the steps to process that input can begin.

Additionally, VAD engines can be configured to wait a longer or shorter amount of time before concluding that speech has ended, but none of them act like humans and process the actual meaning of what is being said.
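To make the idea concrete, here is a minimal sketch of an energy-based VAD with a configurable silence timeout. It is a simplification under stated assumptions, not the detector ChatGPT uses: the function names, the threshold, and the frame format (lists of samples between -1.0 and 1.0) are all invented for this example, and production VAD engines are considerably more sophisticated.

```python
from typing import Optional, Sequence, Tuple


def frame_energy(frame: Sequence[float]) -> float:
    """Mean squared amplitude of one audio frame."""
    return sum(s * s for s in frame) / len(frame) if frame else 0.0


def detect_utterance(
    frames: Sequence[Sequence[float]],
    energy_threshold: float = 0.01,   # assumed value, tune per microphone
    max_silence_frames: int = 3,      # how long a pause ends the recording
) -> Optional[Tuple[int, int]]:
    """Return (start, end) frame indices of the first utterance, end exclusive.

    Recording starts at the first frame louder than the threshold and stops
    once `max_silence_frames` consecutive quiet frames have been seen.
    Returns None if no frame is loud enough to count as speech.
    """
    start = None
    last_voiced = None
    silence_run = 0
    for index, frame in enumerate(frames):
        voiced = frame_energy(frame) > energy_threshold
        if start is None:
            if voiced:
                start = index
                last_voiced = index
            continue
        if voiced:
            last_voiced = index
            silence_run = 0
        else:
            silence_run += 1
            if silence_run >= max_silence_frames:
                break  # pause is long enough: treat the input as finished
    if start is None:
        return None
    return start, last_voiced + 1


quiet = [0.0] * 4
loud = [0.5] * 4
frames = [quiet, loud, loud, quiet, loud, quiet, quiet, quiet, loud]

patient = detect_utterance(frames, max_silence_frames=3)
impatient = detect_utterance(frames, max_silence_frames=1)
```

With a timeout of three frames, the short pause in the middle is tolerated and the utterance spans frames 1 to 4; with a timeout of one frame, the same pause cuts the speaker off after frame 2. Neither setting understands what was said, which is exactly the limitation described above.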

Emotion Tags

On the other end of the spectrum, modern voice synthesis algorithms improve the realism of Generative AI speech by incorporating emotional effects. This capability is extremely important for businesses looking to create more engaging and human-like interactions with their customers.

For example, many text-to-speech models, including the one used by ChatGPT, allow you to specify the emotion expressed in speech by adding meta tags. In some business applications, speech that lacks expressiveness can significantly decrease audience engagement. To address this, we can instruct the AI to assess the emotional content of each sentence before speaking and to insert an emotion tag like "[Surprise]" or "[Joy]" at the beginning.

For instance, when the AI outputs “[Surprise] Wow, that’s great!”, the text-to-speech model generates a tone that genuinely conveys surprise, improving the listener’s experience. By using this approach, custom AI solutions can convey various emotions such as excitement, anger, sadness, and joy, while adding depth to interactions that can resonate better with audiences.
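As a rough illustration, the snippet below separates a leading emotion tag from the sentence it annotates, so that the emotion and the text could be passed to a text-to-speech engine separately. The bracketed tag format follows the examples above; the function name and the idea of lowercasing the tag are assumptions made for this sketch, and the way a particular TTS engine actually accepts emotion hints will differ.

```python
import re
from typing import Optional, Tuple

# A tag is a single word in square brackets at the very start of the sentence.
EMOTION_TAG = re.compile(
    r"^\s*\[(?P<emotion>[A-Za-z]+)\]\s*(?P<text>.*)$", re.DOTALL
)


def split_emotion_tag(sentence: str) -> Tuple[Optional[str], str]:
    """Split '[Emotion] text' into (emotion, text); emotion is None if untagged."""
    match = EMOTION_TAG.match(sentence)
    if match is None:
        return None, sentence.strip()
    return match.group("emotion").lower(), match.group("text").strip()


tagged = split_emotion_tag("[Surprise] Wow, that's great!")
untagged = split_emotion_tag("Your order has shipped.")
```

Here `tagged` carries the emotion `"surprise"` alongside the spoken text, while `untagged` comes back with `None`, so the caller can fall back to a neutral tone.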

This kind of emotional intelligence in AI can significantly improve customer engagement and brand loyalty, making it a valuable asset for any business.

Key Features

Now, we may move to the key features of ChatGPT’s Advanced Voice Mode. Let’s dive deeper into each of them.

- Natural Conversations. OpenAI’s Advanced Voice Mode enables real-time, fluid conversations that mimic human dialogue, including the ability to handle interruptions. This natural interaction can improve customer satisfaction and reduce friction in user experience, leading to increased customer retention and CSAT.

- Emotional Recognition. By detecting and responding to emotional cues in a user’s voice, the AI delivers more empathetic interactions. This emotional intelligence takes customer support and sales to the next level, resulting in stronger client relationships and potentially higher conversion rates.

- Multiple Speaker Handling. OpenAI’s voice model can follow a dialog between several speakers in a conversation, allowing it to maintain contextual accuracy in complex scenarios, such as multi-participant meetings or customer service calls. This capability can streamline communication processes and improve operational efficiency.

- High-Quality Audio Output. The use of a top-notch text-to-speech model ensures that the AI’s voice responses sound natural and clear, avoiding the robotic tone typical of AI-generated speech. This quality helps build users’ trust and engagement, making interactions more pleasant and effective.

- Preset Voices. With a selection of AI-generated voices on offer, businesses can choose the voice that best aligns with their brand identity. This flexibility helps maintain brand consistency, while limiting output to preset voices addresses concerns such as voice imitation and supports the ethical and reliable use of OpenAI audio technology.

How to Use OpenAI Advanced Voice Mode

Overall, the process of using GPT-4 Audio mode is straightforward. To test ChatGPT Voice Mode, just follow the steps below.

- Access ChatGPT Platform - feel free to open the ChatGPT platform, either through the mobile app or the desktop version. You may use your regular browser or mobile phone.

- Turn Voice Mode On.

  - If you are using the mobile app - locate the microphone icon on the chat interface.

  - If you are using the desktop version - check the settings or options menu to ensure that Voice Mode is enabled.

Note! You may need to grant microphone permissions if prompted.

- Start a Conversation - tap the microphone icon and begin speaking your question or command. The AI should respond shortly.

Note! You can mute or unmute your microphone by selecting the microphone icon on the bottom-left of the screen. You can end the conversation by pressing the red icon on the bottom-right of the screen.

At any time, you can switch to the standard Voice Mode by selecting Advanced at the top of the screen.

- Adjust Settings (if necessary) - if the response quality or voice doesn’t meet your expectations, explore the settings to adjust parameters like speech speed, voice type, or microphone sensitivity.

Finally, don’t forget that usage of Advanced Voice Mode (audio inputs and outputs) is limited on a daily basis, and the precise limits are subject to change. The ChatGPT app will show a warning when you have 3 minutes of audio left. Once the limit is reached, the conversation will immediately end and you will be invited to use the standard voice mode.

That’s it. Feel free to test OpenAI’s Voice Mode on different devices and platforms to make sure it fits your needs and expectations.

Use Cases for OpenAI Advanced Voice Mode

OpenAI's Advanced Voice Mode provides a variety of use cases across different types of businesses. Let’s have a look at them in detail.

Education & E-Learning

Education is one of the biggest hubs for AI Voice Agents integration. Educational businesses and e-learning platforms can deploy AI tutors to interact with students and provide personalized learning experiences. The AI’s ability to handle multi-speaker scenarios and offer empathetic responses makes it ideal for creating interactive, student-centered learning environments.

You may see how it works by testing our AI Agents Platform - IONI. It can be easily customized with a Real-Time AI Voice & Text Avatar Agent that can handle all the queries of students, teachers, and other members of the education process. Feel free to start a free trial right now!

Expected ROI: boosts students’ engagement, improves learning outcomes, and scales educational delivery at a lower cost.

Legal & Compliance

Legal firms can use OpenAI Audio Advanced Voice Mode to handle routine client interactions, such as scheduling consultations, providing case updates, and answering common legal inquiries. The NLP capabilities allow for more personalized client communication, while its emotional recognition features ensure that sensitive discussions are handled with appropriate empathy and care.

Moreover, GPT 4 Audio Mode can be integrated into document review processes, allowing legal teams to verbally query large amounts of documents or compliance materials. ChatGPT Voice Mode can process these queries, search through documents, and read relevant sections aloud, all while allowing for follow-up questions or instructions.

Expected ROI: reduces administrative burden, allows legal professionals to focus on more complex and billable tasks, speeds up document review processes, reduces errors, and improves overall efficiency, leading to faster case resolutions and compliance checks.

24/7 Customer Support

Millions of companies worldwide can implement Advanced Voice Mode in their customer support systems to provide more natural and intuitive Interactive Voice Response (IVR) experiences.

This option is a good choice if you already use an AI chatbot in your customer support workflow. It can handle routine inquiries, offer personalized responses, and seamlessly transfer more complex queries to human agents, reducing wait times and improving customer satisfaction.

Expected ROI: increases CSAT and customer experience, reduces operational costs, and improves first-call resolution rates.

Healthcare

Healthcare businesses and providers can use Advanced Voice Mode to automate patient interactions, such as appointment scheduling, reminders, and follow-up calls. The model’s ability to detect and respond to emotions can also be used to provide empathetic support in sensitive conversations.

Expected ROI: improves patient adherence to appointments and treatment plans, reduces administrative workload, and enhances patient satisfaction.

Financial Services

Financial firms can offer voice-activated AI advisors that assist customers with financial planning, account management, and investment advice. The AI can make complex financial information more accessible to clients, building trust and engagement.

Expected ROI: increases client retention and CSAT, improves upselling opportunities.

Real Estate

Real estate businesses can use Voice AI to guide potential buyers through virtual property tours, answering questions and providing additional information. The ability of OpenAI’s voice AI to handle multiple speakers and respond naturally can significantly improve the buying experience, even remotely.

Expected ROI: shortens sales cycles, increases buyers’ engagement in the process, and reduces the need for in-person visits.

Overall, each of these Voice AI cases demonstrates how you can use ChatGPT Voice Mode to develop a more personalized customer experience and increase your company revenue.

Final Thoughts

OpenAI’s Advanced Voice Mode is a new step forward in how AI is used in business. There are many ways it can be implemented today, from customer support centers to more complex integrations into AI Tutors or AI Avatars for educational institutions. In other words, there are many ways Voice AI can be used together with AI Agents to benefit your business.

As more and more companies explore these AI integrations, the potential for improving customer interactions, streamlining workflows, and delivering ROI becomes increasingly clear. The new features of Advanced Voice Mode allow it to be adapted to the unique needs of various industries, making it a valuable asset for businesses looking to stay competitive in a rapidly evolving market.

By integrating this or similar AI technologies, businesses can not only improve their service offerings but also position themselves at the forefront of AI business process automation.