Revolutionizing Education with OpenAI’s Realtime API: AI Teachers are getting close-to-natural conversations

“Wow!” was my first reaction when I saw OpenAI announce the release of the Realtime API. The release marks a significant step forward for teams and AI developers seeking to create dynamic voice experiences.

Whether you're building language apps, educational & training tools, or improving customer support, the Realtime API simplifies the process by offering a single solution for real-time conversational experiences without the need for multiple models.

In this article, I'm excited to guide you through how it works and explore its applications in various business scenarios. Together, we’ll dive into some fascinating examples and uncover how you can make the most of this incredible tool. So, let the journey begin, and let's discover new possibilities together!

What is OpenAI's Realtime API?

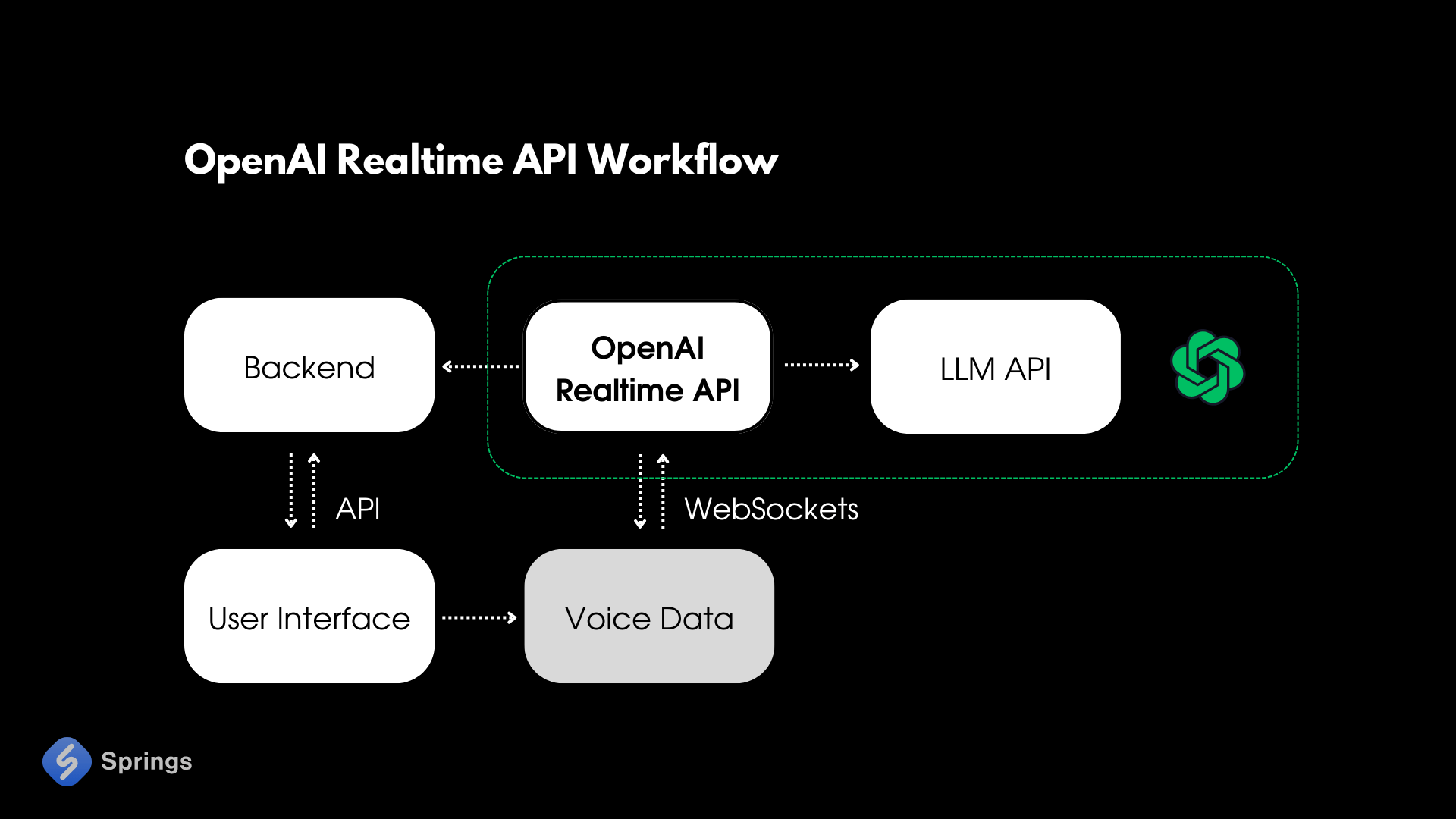

Put simply, OpenAI's Realtime API is a tool that lets developers stream audio input and output directly for more natural conversational experiences. It enables continuous communication with models like GPT-4o through a persistent WebSocket connection.

The key feature of the Realtime API is its multimodal integration: it supports natural speech-to-speech conversations using six preset voices, similar to ChatGPT’s Advanced Voice Mode.

The main new features of this API are as follows:

- Native speech-to-speech: No text intermediary means low latency and nuanced output.

- Natural, steerable voices: The models have a natural inflection and can laugh, whisper, and adhere to tone direction.

- Simultaneous multimodal output: Text is useful for moderation, while faster-than-realtime audio ensures stable playback.

To better understand how the Realtime API can be integrated into an application, let’s have a look at the schema below:

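Previously, building a voice assistant meant chaining several steps: transcribing audio with a speech recognition model, passing the text to a language model, and converting the model's reply back to audio with a text-to-speech model. While the Chat Completions API significantly simplified this workflow by combining the steps into a single API call, it still lagged behind the natural speed of human conversation. OpenAI's Realtime API addresses these limitations by directly streaming audio inputs and outputs, providing smoother conversational interactions and automatically managing interruptions, similar to the functionality of ChatGPT's Advanced Voice Mode.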

OpenAI's Realtime API: How It Works

Let’s have a look under the hood. With the Realtime API, AI developers can set up a persistent WebSocket connection to communicate with GPT-4o, making it possible to exchange information continuously. The API supports function calls, which allows voice assistants to perform tasks or retrieve additional details based on the user’s needs.

According to OpenAI’s official Realtime API documentation, the Realtime API enables you to build low-latency, multi-modal conversational experiences. It currently supports text and audio as both input and output, as well as tool calling.

The WebSocket connection requires the following parameters:

URL: wss://api.openai.com/v1/realtime

Query Parameters: ?model=gpt-4o-realtime-preview-2024-10-01

Headers:

Authorization: Bearer YOUR_API_KEY

OpenAI-Beta: realtime=v1
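
The parameters above can be assembled in a few lines. This is a minimal sketch using only the Python standard library; the helper name `build_realtime_connection` and the `OPENAI_API_KEY` environment variable are my own assumptions for illustration. The resulting URL and headers would be handed to whichever WebSocket client you prefer.

```python
import os
from urllib.parse import urlencode

REALTIME_URL = "wss://api.openai.com/v1/realtime"
MODEL = "gpt-4o-realtime-preview-2024-10-01"


def build_realtime_connection(api_key: str, model: str = MODEL) -> tuple[str, dict[str, str]]:
    """Return the WebSocket URL and headers listed above (hypothetical helper)."""
    url = f"{REALTIME_URL}?{urlencode({'model': model})}"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "OpenAI-Beta": "realtime=v1",
    }
    return url, headers


if __name__ == "__main__":
    # Assumption: the key is stored in the OPENAI_API_KEY environment variable.
    url, headers = build_realtime_connection(os.environ.get("OPENAI_API_KEY", "YOUR_API_KEY"))
    print(url)
```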

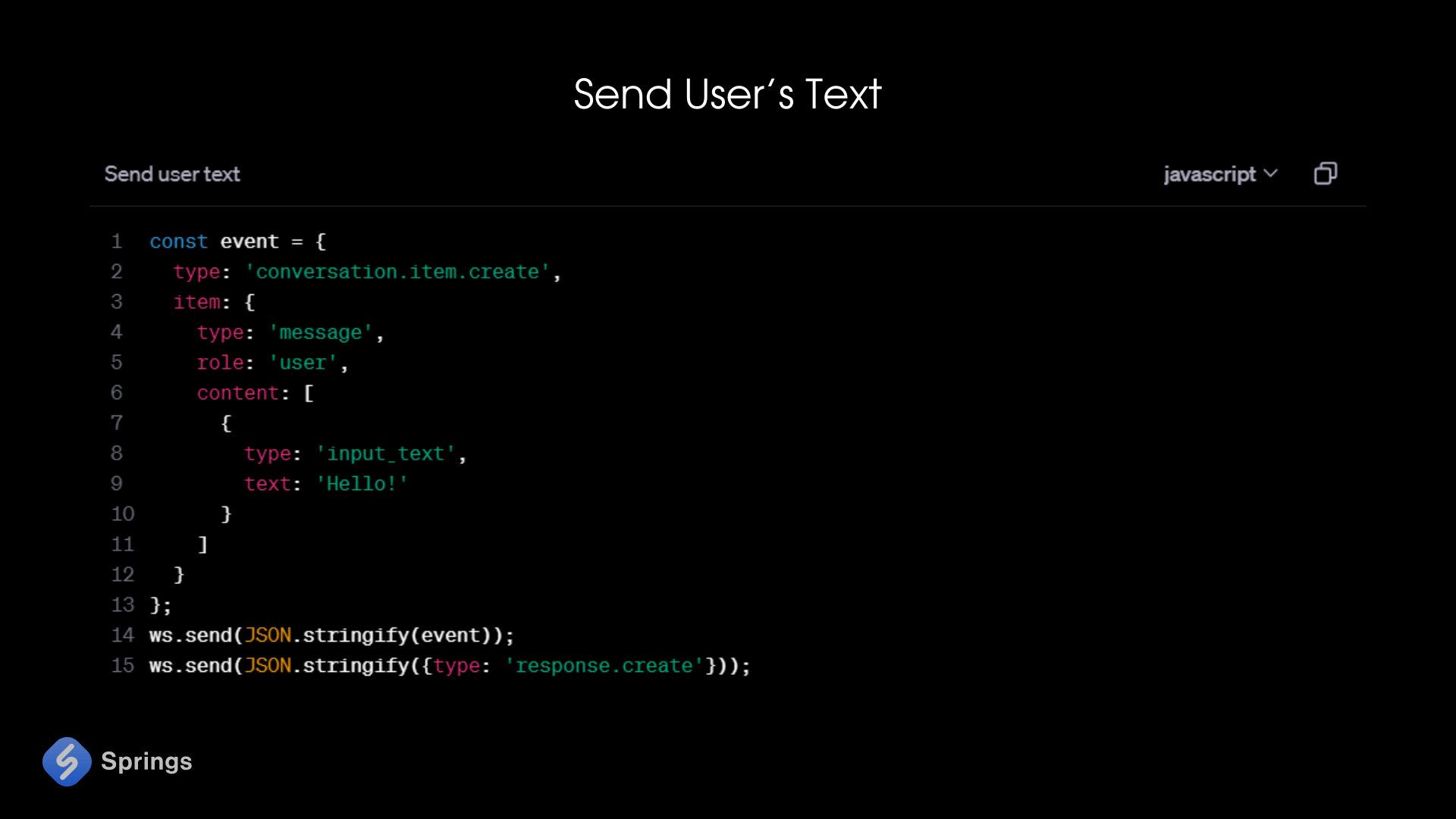

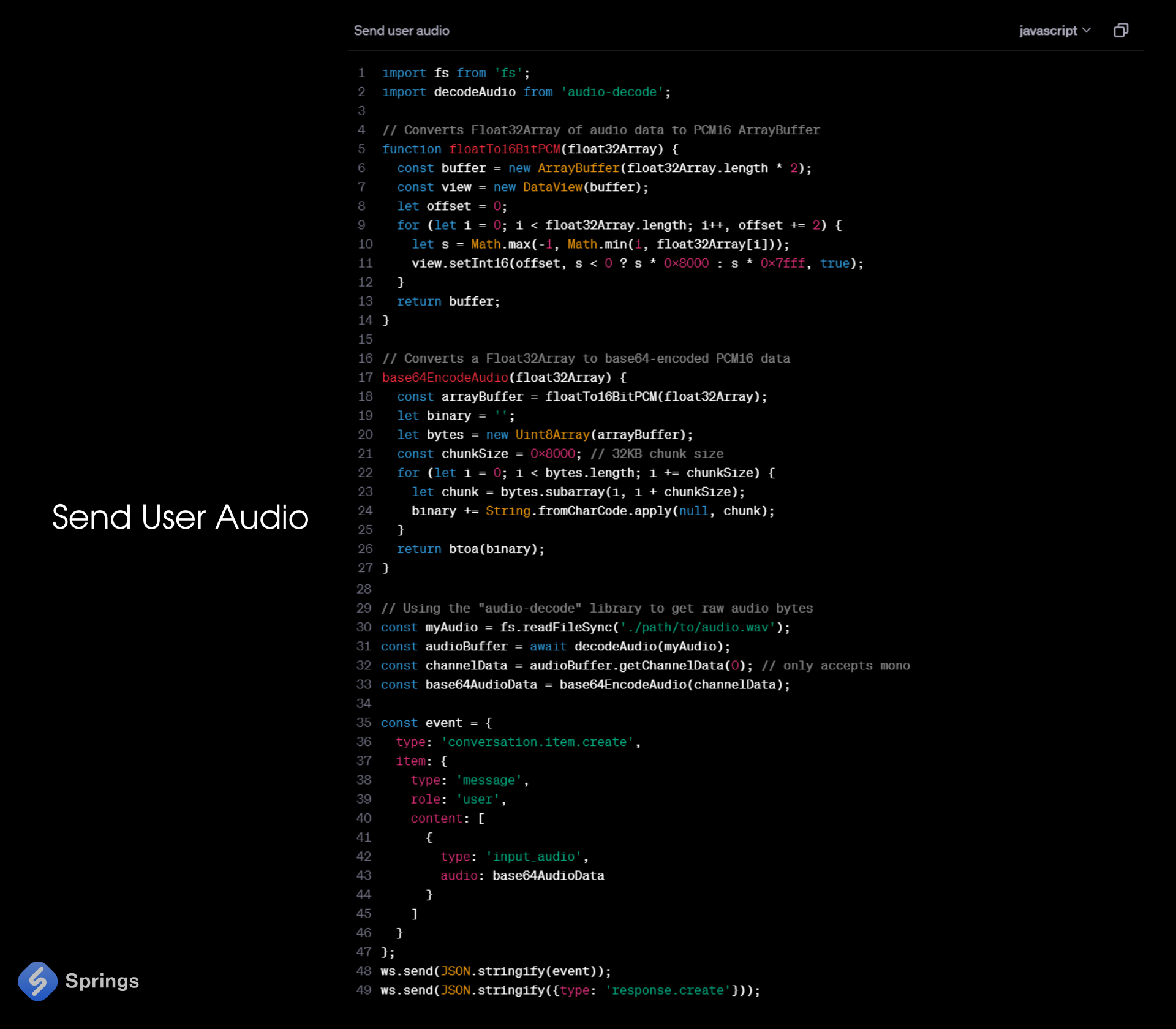

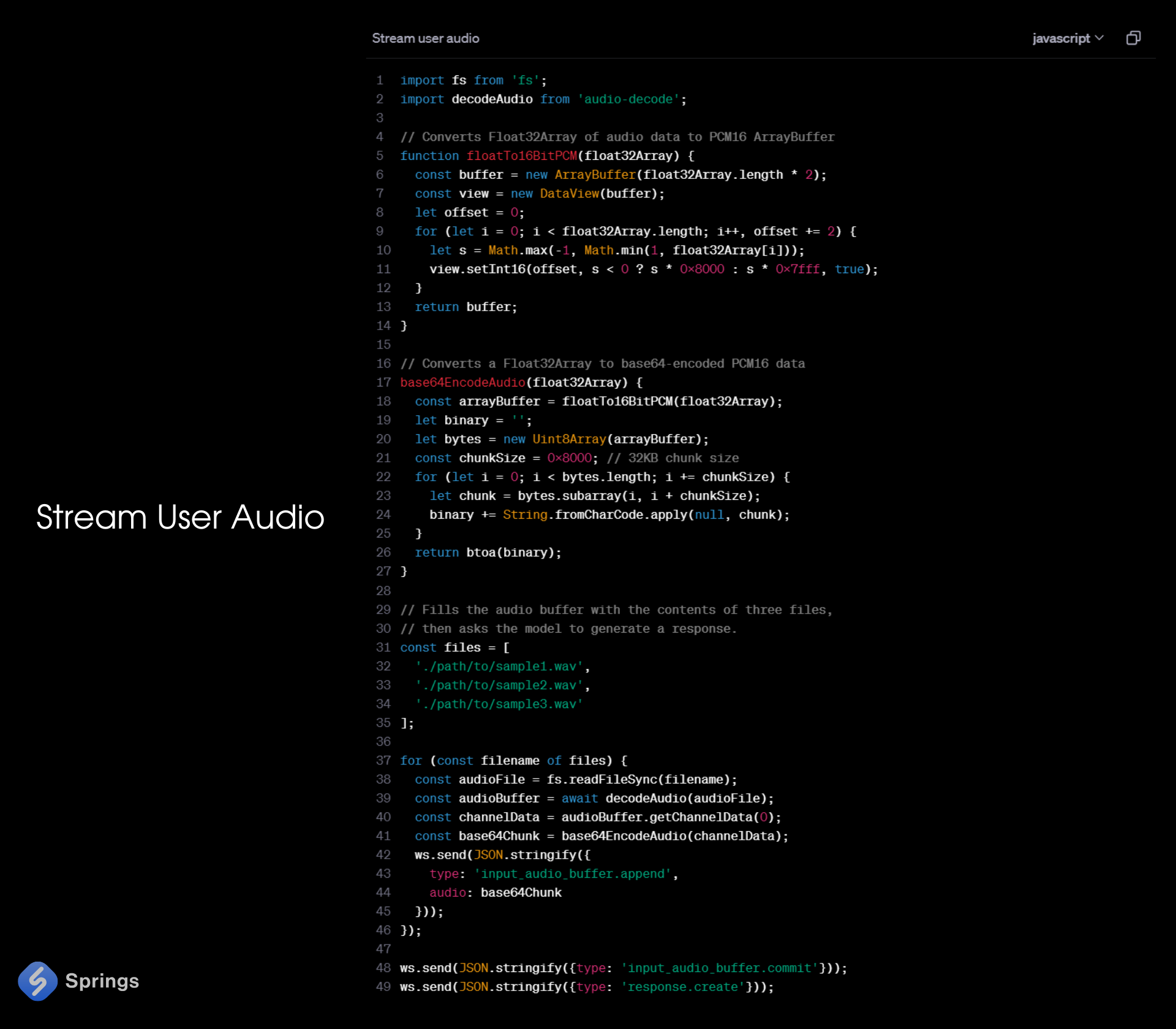

OpenAI also provides examples of using the API for different purposes (each assumes you have already opened a WebSocket connection).

Send User’s Text
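
A rough sketch of what sending a text message could look like. The event names (`conversation.item.create`, `response.create`) and the payload shape reflect my reading of the beta documentation, so please verify them against the current reference. The function `user_text_events` is a hypothetical helper that only builds the JSON strings.

```python
import json


def user_text_events(text: str) -> list[str]:
    """Build two JSON messages: add a user message, then ask for a response."""
    create_item = {
        "type": "conversation.item.create",
        "item": {
            "type": "message",
            "role": "user",
            "content": [{"type": "input_text", "text": text}],
        },
    }
    request_response = {"type": "response.create"}
    return [json.dumps(create_item), json.dumps(request_response)]


# Each string would be sent over an already-open WebSocket, e.g. ws.send(message).
for message in user_text_events("Hello!"):
    print(message)
```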

Send User Audio
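
For a complete audio clip, the audio travels as base64-encoded bytes inside the message. The sketch below assumes 16-bit little-endian PCM at 24 kHz, which is how I understand the default input format; the `input_audio` content type is likewise taken from the beta documentation and should be double-checked. Both helper functions are hypothetical.

```python
import base64
import json
import math
import struct


def float_to_pcm16(samples: list[float]) -> bytes:
    """Convert floats in [-1.0, 1.0] to little-endian 16-bit PCM bytes."""
    clipped = (max(-1.0, min(1.0, s)) for s in samples)
    return b"".join(struct.pack("<h", int(s * 32767)) for s in clipped)


def user_audio_event(pcm16: bytes) -> str:
    """Wrap a complete audio clip in one conversation.item.create message."""
    event = {
        "type": "conversation.item.create",
        "item": {
            "type": "message",
            "role": "user",
            "content": [
                {"type": "input_audio", "audio": base64.b64encode(pcm16).decode("ascii")}
            ],
        },
    }
    return json.dumps(event)


# Stand-in for real speech: 0.1 s of a 440 Hz tone at an assumed 24 kHz sample rate.
tone = [math.sin(2 * math.pi * 440 * n / 24000) for n in range(2400)]
message = user_audio_event(float_to_pcm16(tone))
```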

Stream User Audio
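
Streaming works differently: audio is appended to an input buffer chunk by chunk, then committed. Here is a minimal sketch, assuming the `input_audio_buffer.append` and `input_audio_buffer.commit` event names from the beta documentation; the generator name and the chunk size are arbitrary choices of mine.

```python
import base64
import json
from typing import Iterator


def stream_audio_events(pcm16: bytes, chunk_bytes: int = 4800) -> Iterator[str]:
    """Yield one append message per chunk, then commit and request a response."""
    for start in range(0, len(pcm16), chunk_bytes):
        chunk = pcm16[start:start + chunk_bytes]
        yield json.dumps({
            "type": "input_audio_buffer.append",
            "audio": base64.b64encode(chunk).decode("ascii"),
        })
    # Tell the server the buffered audio is a finished user turn.
    yield json.dumps({"type": "input_audio_buffer.commit"})
    yield json.dumps({"type": "response.create"})
```

In a real application the chunks would come from a microphone rather than a bytes object, and each yielded string would be sent over the WebSocket as soon as it is produced.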

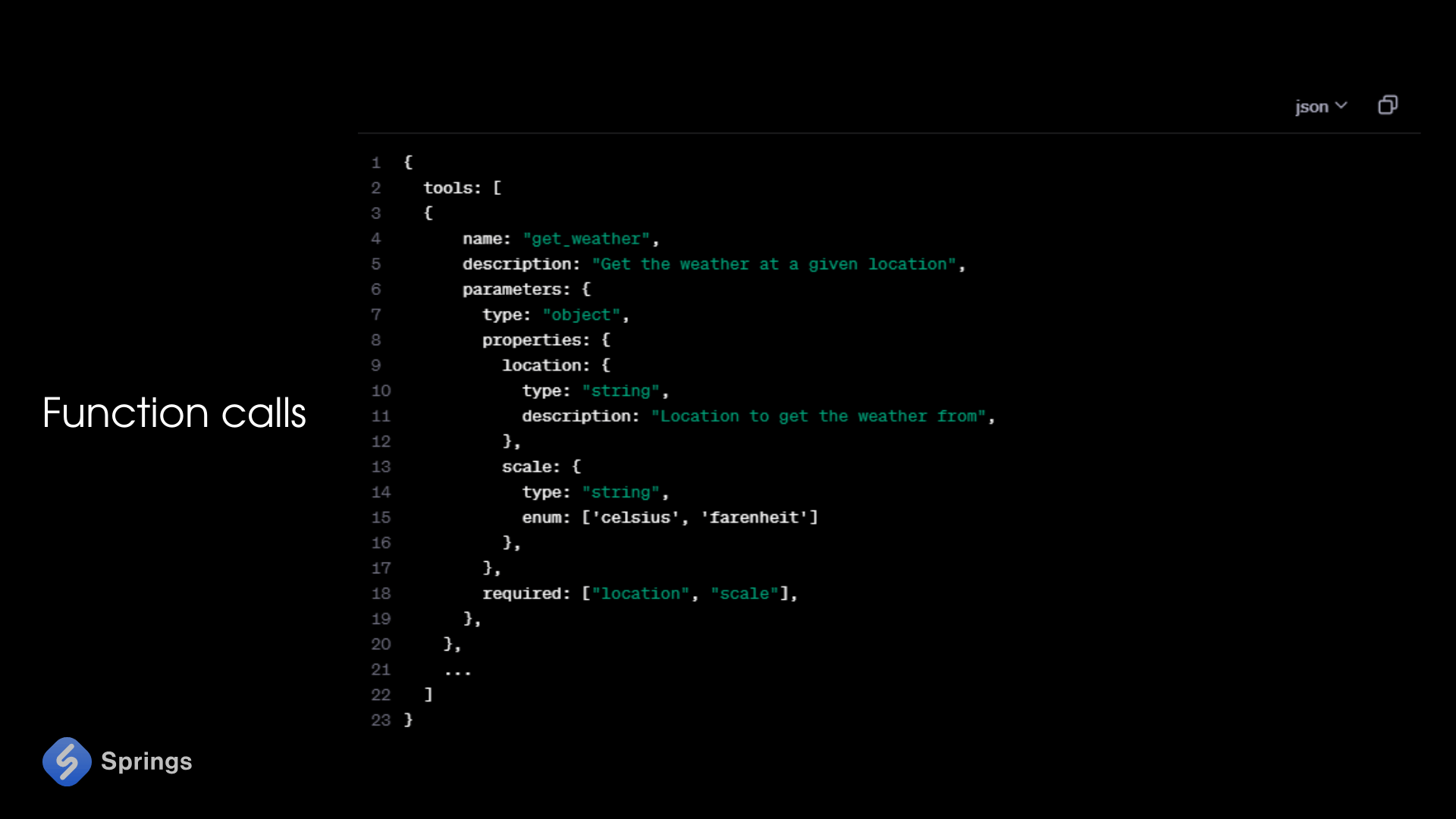

Calling Functions

Clients can set default functions for the server to use throughout a session, or set specific functions for an individual response as needed. The server will call these functions when it judges them appropriate for the request.

These functions are provided in a simple format, similar to the Chat Completions API, and no extra details are needed since only one type of tool is currently supported.
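
To make the session-level versus per-response distinction concrete, here is a hedged sketch. The tool `lookup_lesson` is invented for illustration, and the flat tool shape plus the `session.update` event are my reading of the beta reference, so treat them as assumptions to check against the current documentation.

```python
import json

# Hypothetical tool for an education app; the name and fields are illustrative.
LOOKUP_LESSON_TOOL = {
    "type": "function",
    "name": "lookup_lesson",
    "description": "Find a lesson by topic. Respond to the user before calling.",
    "parameters": {
        "type": "object",
        "properties": {"topic": {"type": "string"}},
        "required": ["topic"],
    },
}


def session_tools_event(tools: list[dict]) -> str:
    """Register default tools for the whole session."""
    return json.dumps({"type": "session.update", "session": {"tools": tools}})


def response_tools_event(tools: list[dict]) -> str:
    """Offer tools for a single response only."""
    return json.dumps({"type": "response.create", "response": {"tools": tools}})
```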

When the server uses a function, it can also respond with audio and text, like saying, “Ok, let me submit that order for you.” The function description helps guide the server on what to do, such as “don’t confirm the order is completed yet” or “respond to the user before using the function.”

The client needs to reply to the function call by sending a conversation item of type "function_call_output". Adding this item doesn’t automatically start another response from the server, so the client may need to request one manually.
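
The reply step might look like the sketch below. The `call_id` and `output` field names are assumptions based on the beta documentation, and `function_result_events` is a hypothetical helper; the second message is the manual response request described above.

```python
import json


def function_result_events(call_id: str, result: dict) -> list[str]:
    """Return the function output item, followed by an explicit response request."""
    output_item = {
        "type": "conversation.item.create",
        "item": {
            "type": "function_call_output",
            "call_id": call_id,  # identifies which function call this answers
            "output": json.dumps(result),  # the result is sent as a JSON string
        },
    }
    # Adding the output does not trigger a reply, so request one explicitly.
    return [json.dumps(output_item), json.dumps({"type": "response.create"})]
```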

Overall, OpenAI Realtime API examples in different industries, such as healthcare, HR, or legal, demonstrate how these features can help simplify voice-based applications. Let’s focus on the education sector as a great example of API usage.

Realtime API: Examples of Use in Education

Now, it’s time to talk about how all this magic works in real businesses and discuss Realtime API examples in the EdTech industry. OpenAI has already released ChatGPT Edu, a version of ChatGPT built for universities. The Realtime API opens up even more options.

Realtime AI Teacher

OpenAI’s Realtime API can be effectively integrated into AI agents for educational & training purposes, enabling more interactive and engaging experiences for students. For instance, AI Teaching Assistants can facilitate spoken discussions and provide immediate feedback during practice sessions, helping students to understand concepts more thoroughly. Realtime API examples in educational settings include AI Tutors that can conduct voice-based quizzes, clarify questions, and guide study sessions, making the learning process feel more natural.

For example, our AI Agents Platform IONI allows you to create an agent to communicate with the AI Avatar of the Teacher/Tutor and get all the needed information about the course, lecture, study program, etc.

Additionally, integrating the Realtime API into AI Learning Companions allows them to support students with disabilities by offering spoken instructions and responses, fostering a more inclusive environment.

Realtime AI Buddy

Using the API for AI Study Buddies can also aid in language learning by conducting conversational practice and pronunciation assessments. In language apps, the realtime API can simulate natural conversations, allowing learners to engage in realistic dialogue scenarios. The ability of AI agents to instantly process spoken language input and produce clear voice responses allows them to assist learners in practicing new languages, understanding cultural nuances, or even mastering specific professional jargon.

Realtime AI Instructor

For educational businesses, this technology opens new possibilities for AI in eLearning content delivery. AI Instructors can use OpenAI’s Realtime API to present audio lectures, answer student queries on the fly, or even conduct interactive seminars. This capability makes the learning experience more engaging for remote and in-person students, as they can conversationally communicate with the AI, almost like having a real tutor available at any time.

Moreover, the Realtime API lets educational chatbots assist educators with administrative tasks, such as automating attendance tracking through voice recognition. This allows teachers to focus more on instructional activities than routine tasks, creating a more efficient and enjoyable educational environment.

Safety Concerns and Challenges

OpenAI’s Realtime API includes multiple safety measures, drawing on experience from ChatGPT’s Advanced Voice Mode and on extensive testing with external experts. These steps aim to minimize risks while providing reliable voice interaction. Let’s have a look at some important safety concerns and challenges of the OpenAI Realtime API.

Monitoring for Misuse

The Realtime API employs both automated tools and human review to identify flagged content that may violate policies. This helps detect and address harmful or inappropriate uses.

Usage Restrictions

Developers are prohibited from using the API for malicious activities such as spamming or spreading false information, and violations can lead to service suspension.

User Transparency Requirements

API developers are required to clearly inform users when they are interacting with AI. This ensures that people know they are not speaking with a human, which can reduce confusion or unintended trust.

Privacy Measures

The Realtime API follows strict privacy commitments. Data is not used to train models without the user’s explicit consent, thereby protecting sensitive information and complying with privacy standards.

Finally, OpenAI publishes Usage Policies that every team using any of OpenAI’s services or APIs must follow.

Future of Realtime API

OpenAI continues to improve the Realtime API and is gathering feedback to guide improvements before it reaches broader availability. The initial release supports voice, with plans to expand into other modalities like vision and video in the future. Current limits allow around 100 simultaneous sessions for top-tier developers, but this will gradually increase to accommodate larger-scale use cases.

Moreover, the Realtime API is planned to be incorporated into the official OpenAI Python and Node.js SDKs, making it easier for developers to adopt. New features like prompt caching are also on the roadmap, allowing past conversation history to be reprocessed more cost-effectively.

The API's features will expand with support for additional models such as GPT-4o mini in upcoming releases, broadening the range of potential applications. These updates aim to empower developers in building new experiences for industries like education, translation, customer service, and accessibility.

However, we should not forget about the alternatives to OpenAI. Giants such as Google, with Gemini, and Meta, with Llama, won’t stand aside and will ship their own updates too. As the Realtime API grows, it will play a crucial role in creating interactive, multimodal experiences that go beyond text.

Conclusion

Now, it is time to draw some conclusions from what we have covered. The release of the Realtime API by OpenAI starts a new era of AI agents, especially in industries such as Education, Information Technologies, Compliance & Legal, Logistics & Retail, Pharmacy & Healthcare, Customer Support, and Data Analysis.

Its persistent WebSocket connection provides smooth, real-time interaction between the user and the LLM. We can forget about the three- or four-stage integrations that used to be required just to let a regular user talk with an AI Avatar. The Realtime API closes these gaps and makes your software more user-friendly.

OpenAI’s Realtime API opens new possibilities for engineers by simplifying the process of creating voice experiences. Its ability to directly connect users to AI avatars without complicated integrations makes the technology more accessible. This ease of implementation is particularly valuable for businesses looking to enhance user interaction and provide seamless customer experiences.

Finally, OpenAI’s Realtime API paves the way for more intuitive AI/ML development tools tailored to industry-specific needs.