Table of Contents:

- Overview

- Basic Concepts of Prompt Engineering

- Elements of a Prompt

- Types of Prompts

- Principles of Effective Prompt Engineering

- Importance of Prompt Engineering

- Prompt Engineering: Examples

- Prompt Engineering: Best practices

- How to be a Prompt Engineer

Overview

Today we are going to talk about one of the most exciting topics in AI in 2024: prompt engineering. What does it mean? How does it work? How do you start a career as a prompt engineer? We will try to answer these and related questions.

Let’s start with what prompt engineering means and some of its basics. There are many definitions of prompt engineering online. According to Wikipedia, prompt engineering is the process of structuring text that can be interpreted and understood by a generative AI model. A prompt is natural language text describing the task that an AI should perform.

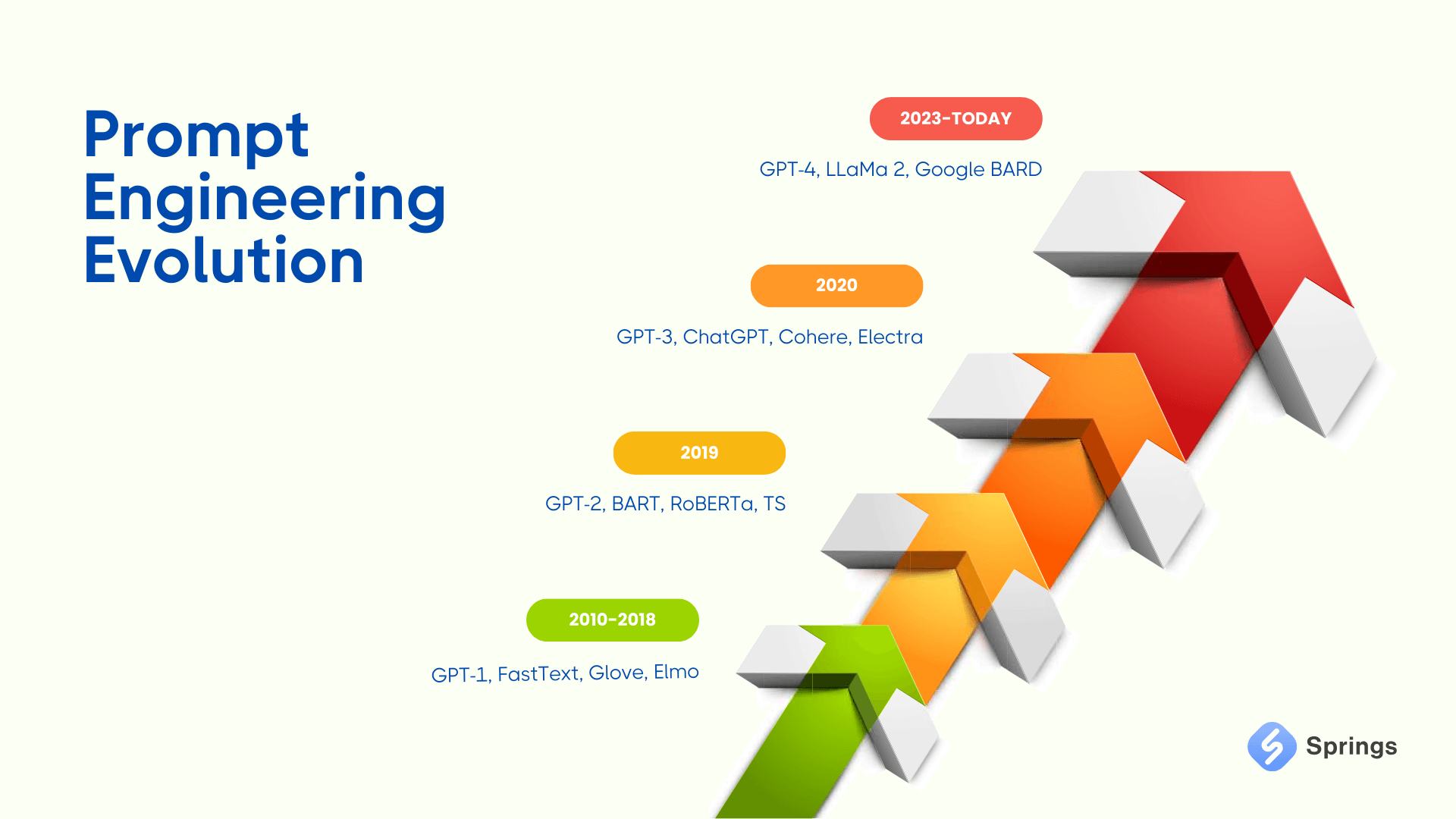

The prompt itself is at the heart of prompt engineering. In 2018, early LLMs like GPT-1 sparked the idea that we could "prompt" these models to generate useful text. At this stage, however, prompt engineering was limited to trial-and-error experimentation by AI researchers (Quora).

By 2019, Google's BERT (introduced in late 2018) had built on the transformer architecture and shown how large-scale pre-training could produce more robust language models. This paved the way for more sophisticated GPT-style models.

The real turning point came in 2020, when OpenAI introduced GPT-3. Few-shot prompting suddenly produced stunning outputs, making the power of prompts clear, and prompt engineering became a must-have skill.

In summary, prompt engineering has quietly been around since the first LLMs but came into its own alongside breakthrough models like GPT-4. As models become ever more advanced, the future looks bright for prompt engineering as a core skill for working with AI assistants.

Basic Concepts of Prompt Engineering

Continuing our prompt engineering guide, let's first look at what the word “prompt” means and at some of its synonyms, such as evoke, call forth, and elicit.

According to the Cambridge Dictionary, the word “prompt” means “make someone decide to say or do something”.

In other words, a prompt is a short instruction for someone or something to perform an action that you need. Other synonyms you may come across include move, cause, inspire, and occasion.

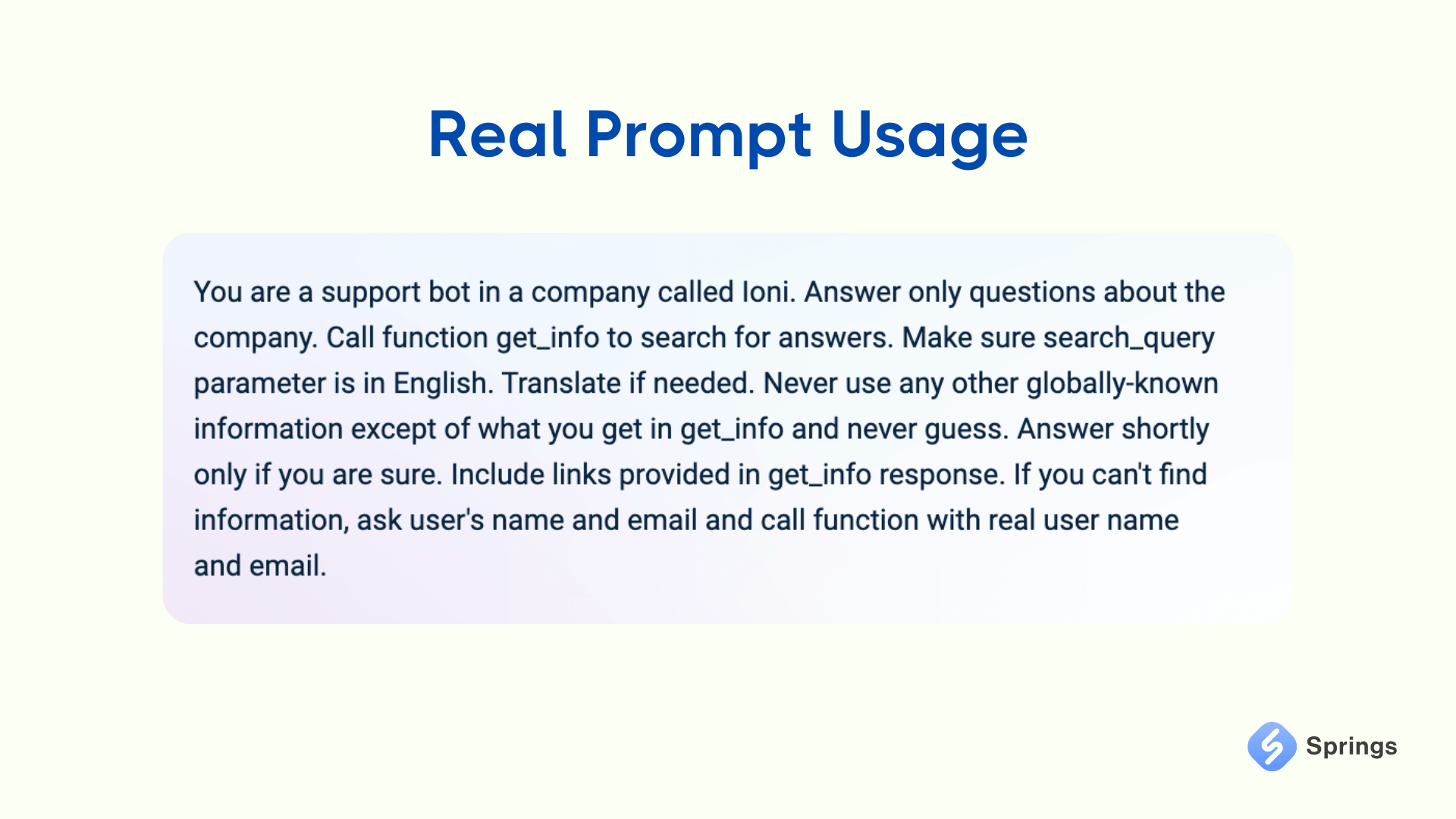

Now, let’s look at how a prompt works in practice. To keep it simple, we will show you a real prompt used in IONI, our AI chatbot builder, so you can see not just theory but real prompt engineering practice.

As the prompt above shows, we instruct our model with the following parameters:

- Origin/company name.

- Limits on knowledge base usage.

- Calling functions when needed (learn more about ChatGPT functions in our article).

- Translation parameters.

- Length of answers.

- The action to take in a specific scenario.
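The parameters listed above can be sketched as a small prompt builder that turns each setting into one instruction line. This is a minimal sketch, not IONI's actual prompt: the `AssistantConfig` fields, the `build_system_prompt` helper, the company name "Acme", and the wording of each instruction are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AssistantConfig:
    # Hypothetical fields mirroring the parameters above; not IONI's real schema.
    company_name: str
    knowledge_base_only: bool = True
    functions: list = field(default_factory=list)
    reply_language: str = "the same language as the user"
    max_sentences: int = 3
    fallback_action: str = "offer to connect the user with a human agent"


def build_system_prompt(cfg: AssistantConfig) -> str:
    """Turn the configuration into a system prompt, one instruction per line."""
    lines = [f"You are the virtual assistant of {cfg.company_name}."]  # origin / company name
    if cfg.knowledge_base_only:  # limit knowledge base usage
        lines.append("Answer only from the provided knowledge base; if the answer is not there, say so.")
    if cfg.functions:  # function calling
        lines.append("When needed, call these functions: " + ", ".join(cfg.functions) + ".")
    lines.append(f"Always reply in {cfg.reply_language}.")  # translation parameters
    lines.append(f"Keep answers to at most {cfg.max_sentences} sentences.")  # answer length
    lines.append(f"If you cannot help, {cfg.fallback_action}.")  # action for a specific scenario
    return "\n".join(lines)


print(build_system_prompt(AssistantConfig("Acme", functions=["get_order_status"])))
```

Keeping each parameter as a separate field makes it easy to reuse the same template across clients and to change one behavior (for example, answer length) without rewriting the whole prompt.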

The prompt above can serve as a template for many domains and use cases. Springs has implemented prompts like this in many AI and ML projects, so we have extensive hands-on experience with prompt engineering for GPT-3 and GPT-4.

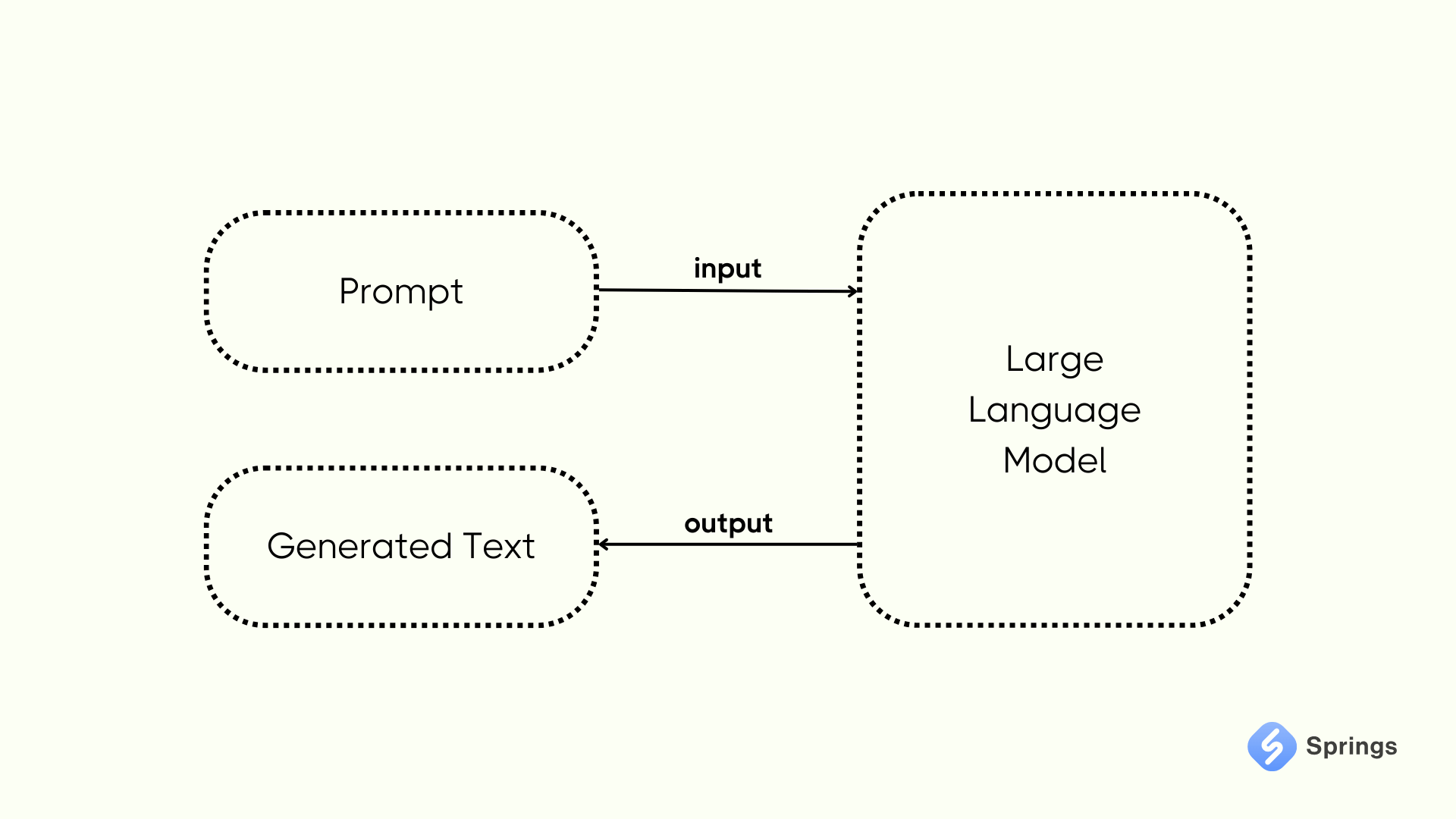

Overall, the prompt engineering schema is summarized in the diagram above.

Ultimately, prompt engineering is the link between raw computational power and concrete, purposeful results. It is a form of communication that conveys the user's intention clearly to the model, so that the model's response matches the user's expectations or goals. Proficiency in prompt engineering can greatly improve the effectiveness, precision, and efficiency of interactions with sophisticated machine learning technologies.

Elements of a Prompt

A well-crafted prompt is the foundation of every prompt engineering guideline: the better your prompt, the better the result you will receive. So always spend time refining and reviewing your prompts.

Here's a breakdown of the components essential for constructing a well-tuned prompt. These elements help you get the most out of generative AI models.

Agenda

Start with a concise introduction or background details to establish the conversational setting. This primes the model with context and sets the stage for a focused, coherent dialogue. For example: "Imagine you are a helpful and friendly customer support agent specializing in troubleshooting technical issues with computers."

Instruction

Clearly explain the tasks you want the Large Language Model to perform or the specific questions you wish to be addressed. This directive ensures the model's response aligns with the intended topic. For instance: "Present three strategies for enhancing customer satisfaction in the hospitality industry."

Trigger

Provide specific examples that you want the Generative AI model to consider or expand upon. This provides a foundation for generating relevant responses. For example: "Given the customer complaint 'I received a damaged product,' propose an appropriate response and suggest reimbursement options."

Format

Define the desired format for the response, whether it be a bullet-point list, paragraph, code snippet, or any other preferred structure. This instruction aids the model in shaping its output accordingly. For example: "Deliver a step-by-step guide on password reset, utilizing bullet points."

Using these key elements will help you craft a clear, well-defined prompt that leads to responses that are both relevant and high-quality.
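To make the four elements concrete, here is a minimal sketch that assembles Agenda, Instruction, Trigger, and Format into a single prompt, in that order and separated by blank lines. The `build_prompt` helper and its blank-line layout are illustrative assumptions, not a required structure; the example strings reuse the samples from this section.

```python
def build_prompt(agenda: str, instruction: str, trigger: str = "", output_format: str = "") -> str:
    """Join the four prompt elements in order, separated by blank lines; empty elements are skipped."""
    parts = [agenda, instruction, trigger, output_format]
    return "\n\n".join(part.strip() for part in parts if part.strip())


prompt = build_prompt(
    agenda="Imagine you are a helpful and friendly customer support agent.",
    instruction="Propose an appropriate response and suggest reimbursement options.",
    trigger="Customer complaint: 'I received a damaged product.'",
    output_format="Format the answer as bullet points.",
)
print(prompt)
```

Putting the context first and the format requirement last is a common convention; the order itself is a judgment call you can test like any other prompt variation.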

Types of Prompts

We have covered prompt engineering tips and techniques and even built our own prompt guide. Now it is time to look at the main types of prompts.

You may see these called either prompt types or prompt engineering techniques; in practice, both terms refer to the same thing. Let's go through them and try them out with ChatGPT.

Zero-Shot Learning

This type involves giving the AI a task without any examples. We describe what we want in detail, assuming the AI has seen no examples of the task.

One-Shot Learning

In this case, we provide one example along with the prompt. This helps the AI understand the context or format we expect.

Few-Shot Learning

Here we provide a few examples to help the model understand the pattern or style of the response we are looking for.
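The only difference between zero-shot, one-shot, and few-shot prompts is how many worked examples precede the real query. The sketch below shows this with one hypothetical helper, `build_k_shot_prompt`, and an illustrative sentiment-classification task; the "Input:/Output:" layout is an assumed convention, not a requirement of any particular model.

```python
def build_k_shot_prompt(task, examples, query):
    """Zero-shot if `examples` is empty, one-shot with one pair, few-shot with several."""
    lines = [task, ""]
    for text, label in examples:  # each example shows the input -> output pattern
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]  # the model continues after "Output:"
    return "\n".join(lines)


TASK = "Classify the sentiment of each review as positive or negative."
EXAMPLES = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked in a week.", "negative"),
    ("Setup took two minutes.", "positive"),
]
query = "Customer support never replied."

zero_shot = build_k_shot_prompt(TASK, [], query)
one_shot = build_k_shot_prompt(TASK, EXAMPLES[:1], query)
few_shot = build_k_shot_prompt(TASK, EXAMPLES, query)
print(few_shot)
```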

Chain-of-Thought Prompting

With this technique, we ask the AI to lay out its reasoning step by step. This is particularly useful for complex reasoning tasks.
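A chain-of-thought prompt usually pairs the request for step-by-step reasoning with a fixed marker for the final result, so the answer can be extracted programmatically. This is a minimal sketch under that assumption: the `Answer:` marker, the `cot_prompt` and `extract_answer` helpers, and the sample reply are all hypothetical, and the sample stands in for a real model output.

```python
import re
from typing import Optional

COT_INSTRUCTION = (
    "Think through the problem step by step, numbering each step. "
    "Then give the final result on its own line, starting with 'Answer:'."
)


def cot_prompt(question: str) -> str:
    """Append a step-by-step reasoning instruction to the question."""
    return f"{question}\n\n{COT_INSTRUCTION}"


def extract_answer(model_output: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if the model omitted it."""
    matches = re.findall(r"^Answer:\s*(.+)$", model_output, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


print(cot_prompt("A shop has 3 boxes of 4 apples and sells 5 apples. How many are left?"))

# A hand-written reply in the requested format, standing in for a real model output.
sample_reply = "1. 3 boxes x 4 apples = 12 apples.\n2. 12 - 5 = 7.\nAnswer: 7"
print(extract_answer(sample_reply))
```

Returning `None` when the marker is missing lets the calling code retry or flag the response instead of silently using a partial answer.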

Prompting with Iterations

With this type of prompting, we refine the prompt based on the outputs we get, gradually guiding the AI toward the desired answer or style. A typical sequence looks like this:

For example, the initial prompt could be: "I'm researching fraud prevention in travel. Can you outline key areas to focus on?"

Follow-up 1 prompt: "Could you detail identity verification methods for travel?"

Follow-up 2 prompt: "How can transaction monitoring be implemented effectively?"

This structured approach transitions from broad queries to specific insights, aiding complex topics like travel fraud prevention.
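In a chat setting, iterative prompting works because each follow-up is sent together with the earlier turns, so the model can build on its previous answers. The sketch below replays the travel-fraud sequence this way. It assumes a role/content message format similar to common chat APIs; `run_iterations` and `fake_model` are hypothetical, and `fake_model` is a stand-in for a real API call.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def run_iterations(prompts: List[str], ask: Callable[[List[Message]], str]) -> List[Message]:
    """Send each prompt with the full history so follow-ups build on earlier answers."""
    history: List[Message] = []
    for prompt in prompts:
        history.append({"role": "user", "content": prompt})
        reply = ask(history)  # a real implementation would call a chat-completion API here
        history.append({"role": "assistant", "content": reply})
    return history


def fake_model(history: List[Message]) -> str:
    """Stand-in model: just reports which user turn it is answering."""
    user_turns = sum(1 for m in history if m["role"] == "user")
    return f"(reply to prompt {user_turns})"


PROMPTS = [
    "I'm researching fraud prevention in travel. Can you outline key areas to focus on?",
    "Could you detail identity verification methods for travel?",
    "How can transaction monitoring be implemented effectively?",
]
for message in run_iterations(PROMPTS, fake_model):
    print(f"{message['role']}: {message['content']}")
```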

Principles of Effective Prompt Engineering

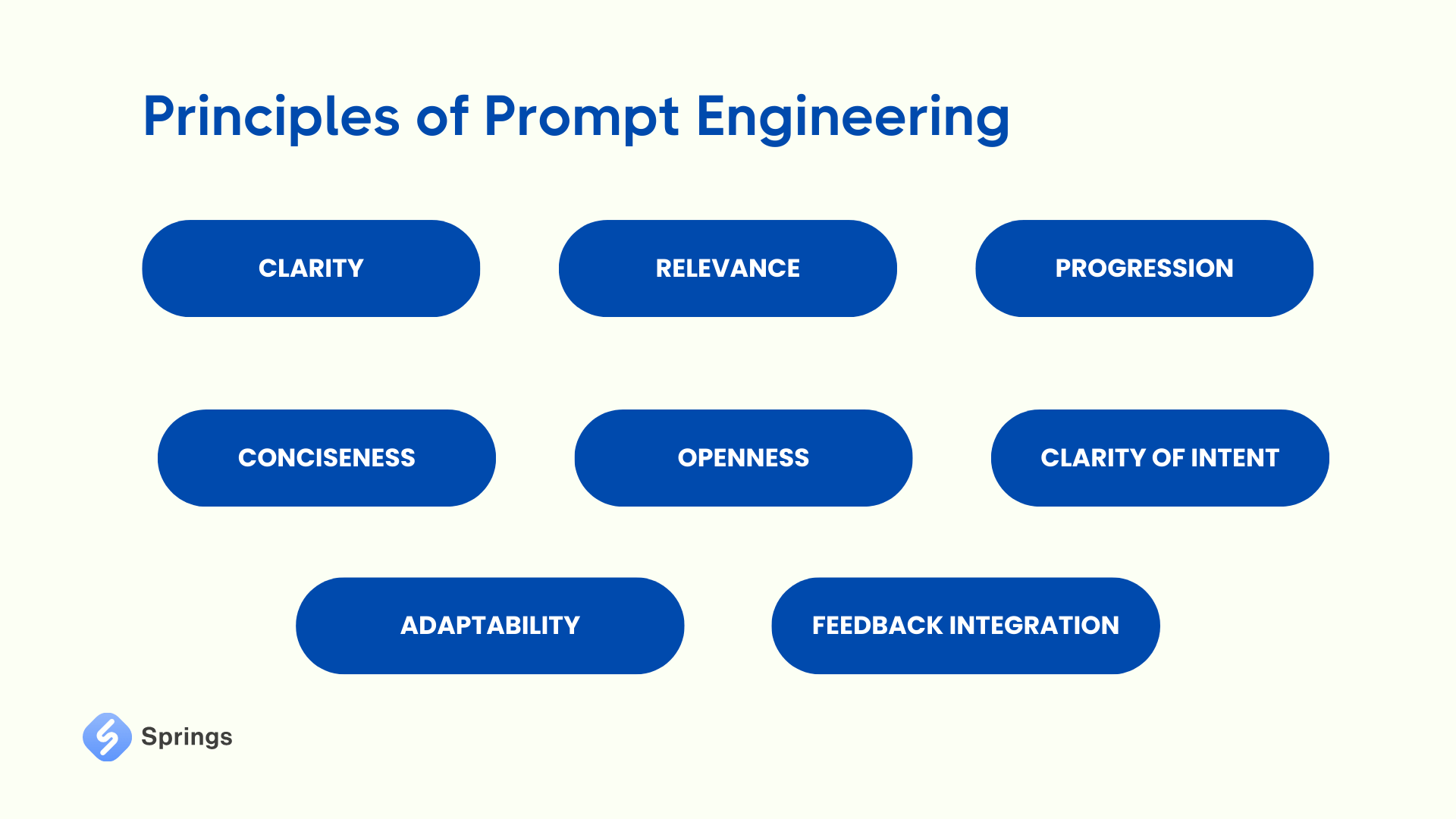

Effective prompt engineering rests on a set of guidelines and best practices for creating a great prompt. Here are some key principles:

Clarity

Prompts should be clear and specific, avoiding ambiguity or confusion. Clear prompts help the generative AI understand the user's intent accurately.

Relevance

Prompts should be relevant to the context and purpose of the interaction. They should guide the conversation towards achieving the user's goal or addressing their query.

Progression

Prompts should facilitate a logical progression of the conversation, moving from general inquiries to more specific details or actions. This helps structure the interaction and ensures that relevant topics are covered systematically.

Conciseness

Prompts should be concise and to the point, avoiding unnecessary verbosity. Clear and succinct prompts streamline the interaction process and enhance user experience.

Openness

Prompts should encourage open-ended responses, allowing for flexibility and creativity in conversational AI. Open prompts enable the exploration of diverse topics and perspectives.

Clarity of Intent

Prompts should clearly communicate the user's intention or request, guiding the LLM to provide relevant and accurate responses. Well-defined intent helps avoid misunderstandings and facilitates meaningful interactions.

Adaptability

Prompts should be adaptable to different contexts and user preferences. They should accommodate variations in language, tone, and style to effectively engage with a diverse range of users.

Feedback Integration

Prompts should incorporate feedback mechanisms to assess the effectiveness of the interaction and adjust accordingly. Continuous feedback helps refine the prompt design and improve overall user experience.

By following these principles, prompt engineering can improve the effectiveness and efficiency of interactions between users and AI chatbots, leading to more engaging and productive conversations.

Importance of Prompt Engineering

Prompt engineering is essential for optimizing interactions between users and transformer models. It facilitates clear communication, ensuring that user intentions are accurately conveyed and reducing the likelihood of misinterpretation. Well-engineered prompts enhance user engagement and satisfaction, leading to a more valuable overall interaction experience.

Moreover, prompt engineering contributes to task accomplishment by giving users clear guidelines that help them express their needs and receive accurate, actionable AI responses. This structured approach also helps reduce bias and ambiguity in user inputs, promoting fairness and improving the quality of AI interactions.

Effective prompt engineering enables both open-source and closed-source models to handle diverse domains and topics efficiently. Adaptable, versatile prompts allow AI systems to address a wide range of user queries, contributing to their flexibility and capability. By continuously refining prompt design based on user feedback, teams can improve the performance of LLM-based applications over time and increase user satisfaction. Prompt engineering also promotes efficient use of computational resources by keeping conversations focused on relevant topics.

Overall, prompt engineering is necessary for creating useful interactions, ensuring that AI assistants better understand and fulfill user requirements across various contexts.

Prompt Engineering: Examples

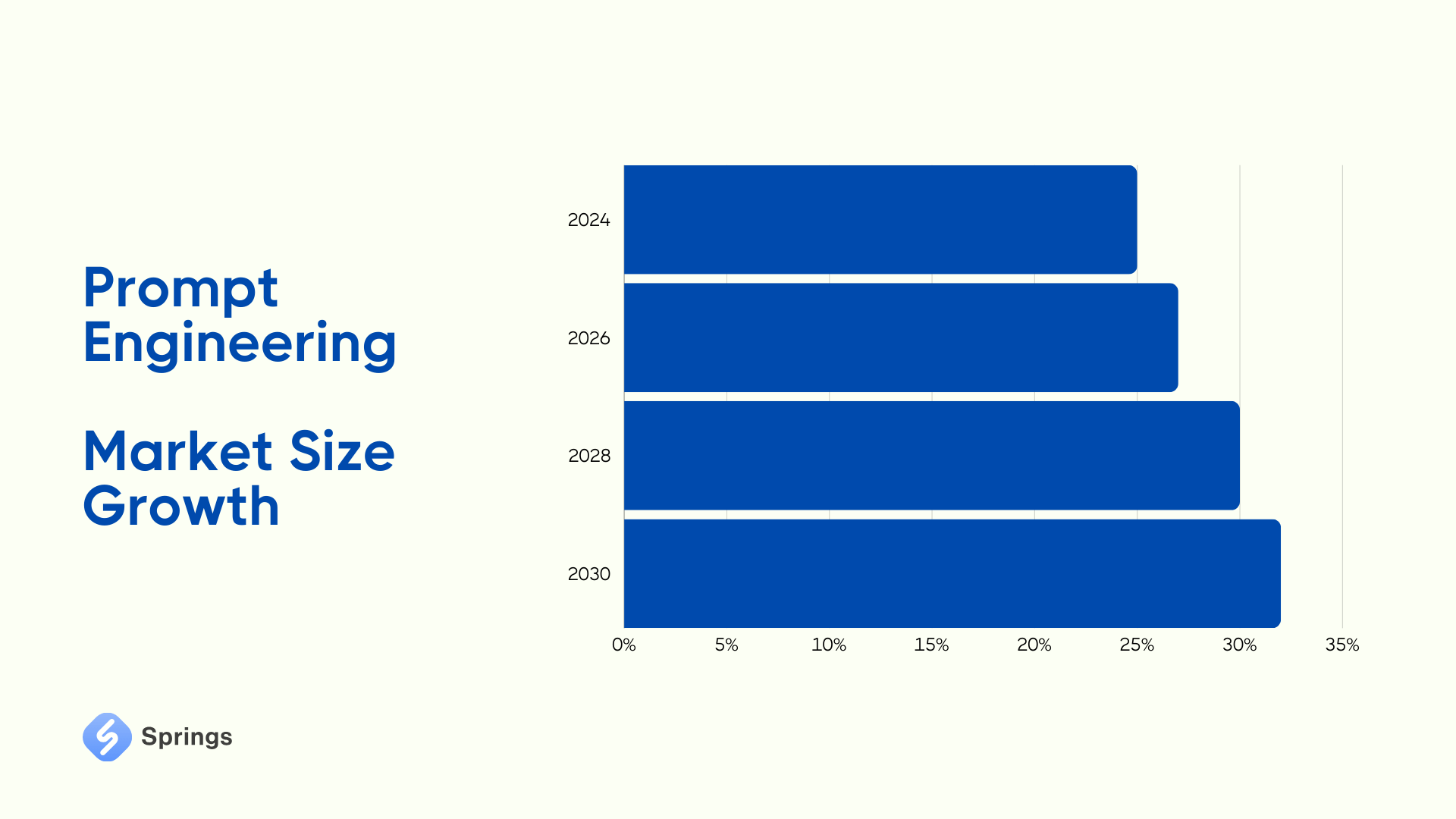

According to Upwork research, AI, from advancements in computer vision to predictive models, is seeing large growth, with an expected global market size of nearly $1.6 billion by 2030.

Moreover, according to Grand View Research, the global prompt engineering market size was estimated at USD 222.1 million in 2023 and is projected to grow at a compound annual growth rate (CAGR) of 32.8% from 2024 to 2030.

Let's look at some key areas where prompt engineering is applied.

NLP Tasks

LLMs use natural language processing techniques to comprehend and analyze text. Prompt engineers use LLMs for tasks such as summarizing news articles or translating text, providing users with concise and accurate information.
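For summarization and translation, prompt engineers often keep reusable templates with placeholders and wrap the input text in clear delimiters so the model can tell instructions from content. The two templates below are illustrative assumptions, not prescribed wording; triple quotes as delimiters are one common convention among several.

```python
SUMMARIZE = (
    "Summarize the news article below in {n} bullet points for a general audience.\n\n"
    'Article:\n"""\n{text}\n"""'
)
TRANSLATE = (
    "Translate the text below from {source} to {target}. Return only the translation.\n\n"
    'Text:\n"""\n{text}\n"""'
)

article = "The city council approved a new bike lane network on Monday."
print(SUMMARIZE.format(n=3, text=article))
print(TRANSLATE.format(source="English", target="Spanish", text="Where is the train station?"))
```

Because `str.format` does not re-parse substituted values, input text that itself contains curly braces is inserted unchanged.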

Chatbots and Assistants

Prompt engineers keep AI chatbots and assistants up to date with relevant information by integrating data from vector databases. They use these technologies to enrich conversations and ensure timely, relevant responses to user queries, whether about product updates or weather forecasts.
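Integrating a vector database typically means retrieving the stored passages whose embeddings are closest to the query and placing them into the prompt as context. The sketch below illustrates that flow with a tiny in-memory list in place of a real vector database and hand-written three-dimensional vectors in place of model-generated embeddings; `DOCS`, `top_k`, and `rag_prompt` are hypothetical names, and the documents are made up.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Toy "vector store" of (text, embedding) pairs; real systems compute embeddings with a model.
DOCS = [
    ("Version 2.1 adds dark mode and faster sync.", [0.9, 0.1, 0.0]),
    ("Tomorrow: sunny, high of 24 degrees Celsius.", [0.0, 0.2, 0.9]),
    ("Refunds are processed within 5 business days.", [0.1, 0.9, 0.1]),
]


def top_k(query_vec: Sequence[float], k: int = 1) -> List[str]:
    """Return the texts of the k documents most similar to the query vector."""
    ranked = sorted(DOCS, key=lambda doc: cosine(query_vec, doc[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def rag_prompt(question: str, query_vec: Sequence[float], k: int = 1) -> str:
    """Build a prompt that grounds the answer in the retrieved context."""
    context = "\n".join(f"- {text}" for text in top_k(query_vec, k))
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


print(rag_prompt("What's new in the latest update?", [0.8, 0.2, 0.1]))
```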

Content Generation

Prompt engineers play a major role in generating accurate content tailored to specific formats and styles. By providing clear prompts, engineers guide LLMs to produce desired outputs, such as poems in a particular literary style.

Question-Answering Systems

AI developers enable LLMs to address user inquiries in question-answering systems efficiently. By creating prompts that encourage the AI to condense relevant information into responses, developers enhance the system's ability to provide accurate answers to user questions.

Recommendation Systems

Large language models are instrumental in creating personalized recommendation systems for consumers. Chatbot developers use AI models to analyze shopper preferences and generate tailored recommendations, enhancing customer engagement and loyalty.

Data Analysis and Insights

Generative AI models, coupled with prompt engineering, offer valuable insights for companies seeking advanced data analytics. By building or fine-tuning models based on proprietary data, organizations can extract meaningful insights and streamline decision-making processes.

Prompt Engineering: Best practices

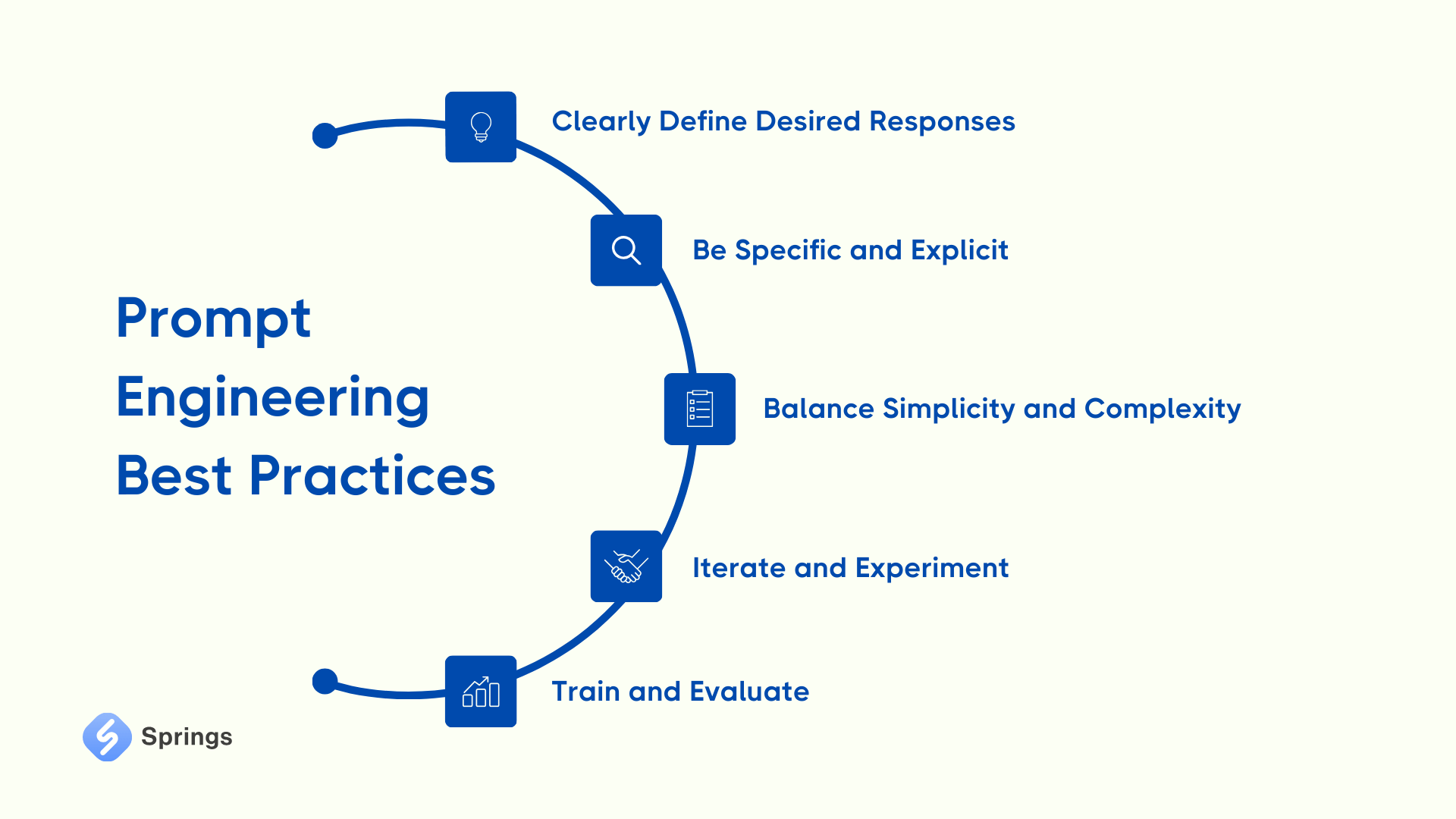

Now that we understand prompt engineering techniques, let’s look at prompt engineering best practices.

Clearly Define Desired Responses. Clearly delineate the scope of the response you need, keeping objectives within the AI's capabilities. For instance, when asking about a historical figure's life, explicitly state that you want their birth and death dates.

Be Specific and Explicit. Imagine you need to integrate ChatGPT into a website and have to brief the developers: vague instructions lead to vague results. The same applies here. Prompt engineering always requires explicit guidance to deliver the desired outputs.

Balance Simplicity and Complexity. Striking the right balance between simplicity and complexity is crucial for prompt clarity and AI comprehension. Avoid overly simplistic prompts that lack context, as well as overly complex ones that may confuse the AI.

Iterate and Experiment. Prompt engineering is an iterative process that requires experimentation. Test different prompt variations, compare the AI's responses, and iterate based on the results. Continuously optimize prompts for accuracy, relevance, and efficiency, adjusting their length and content as needed to improve output quality.
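One lightweight way to iterate is to run each prompt variant against a small set of test cases and keep the one that scores best. The sketch below assumes a simple "expected text appears in the output" check; the `score_prompt` and `best_prompt` helpers, the two templates, and `fake_model` (a deterministic stand-in for a real model call) are all hypothetical.

```python
from typing import Callable, List, Tuple

Case = Tuple[str, str]  # (input text, expected substring in the output)


def score_prompt(template: str, cases: List[Case], model: Callable[[str], str]) -> float:
    """Fraction of test cases whose output contains the expected string (case-insensitive)."""
    if not cases:
        raise ValueError("need at least one test case")
    hits = 0
    for user_input, expected in cases:
        output = model(template.format(input=user_input))
        if expected.lower() in output.lower():
            hits += 1
    return hits / len(cases)


def best_prompt(templates: List[str], cases: List[Case], model: Callable[[str], str]) -> str:
    """Return the template with the highest score on the test cases."""
    return max(templates, key=lambda t: score_prompt(t, cases, model))


def fake_model(prompt: str) -> str:
    """Stand-in model: only answers cleanly when asked for a one-word label."""
    if "one word" in prompt:
        return "negative" if "broke" in prompt else "positive"
    return "It is hard to say."


V1 = "What do you think about this review? {input}"
V2 = "Classify the review as positive or negative. Reply with one word.\nReview: {input}"
CASES = [("Love it, works great.", "positive"), ("It broke after a day.", "negative")]
print(best_prompt([V1, V2], CASES, fake_model))
```

Substring matching is deliberately crude; for open-ended outputs you would swap in a stricter check or human review, but the loop of "vary, score, keep the best" stays the same.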

Train and Evaluate. If AI performance falls short, consider additional training to fine-tune models for your specific requirements. Many providers offer options for customizing AI models with additional data, enabling refinement toward desired output formats or patterns. Use the fine-tuned models together with a prompt library to streamline and improve AI interactions.
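Fine-tuning data is usually a file of example conversations showing the exact output you want. The sketch below assumes the chat-style JSONL layout used by OpenAI's fine-tuning API (one JSON object with a `messages` list per line); other providers use different formats, so check your provider's documentation. The `to_finetune_jsonl` helper and the "Acme" example are hypothetical.

```python
import json


def to_finetune_jsonl(system_prompt, pairs):
    """Serialize (question, ideal answer) pairs as one JSON object per line."""
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


data = to_finetune_jsonl(
    "You are Acme's support assistant. Answer in two sentences or fewer.",
    [("How long do refunds take?", "Refunds are processed within 5 business days.")],
)
print(data, end="")
```

Reusing the same system prompt in the training data and in production keeps the fine-tuned model and your prompt library consistent with each other.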

How to be a Prompt Engineer

Today, many technical people are asking: how do I become a prompt engineer? The first step is to understand fundamental AI concepts and familiarize yourself with custom AI/ML development.

We prepared a short FAQ for you on how to become a prompt engineer in 2024.

Do I need a degree to be a prompt engineer?

There isn’t a strict degree requirement for prompt engineers, but a degree in a related field is always helpful. Candidates with a computer science background or Python development experience may have an advantage over others.

Does prompt engineering require coding skills?

Knowing a programming language can be helpful for prompt engineering, but it isn’t required. That said, previous experience as a web developer will be useful.

Is it hard to do prompt engineering?

Prompt engineering takes time and experimentation to find out which prompts work, so you need to invest time in learning about human-computer interaction and the capabilities of the AI models you work with.

So, to become a prompt engineer, you need to practice creating useful prompts, gain domain-specific knowledge, stay up to date on AI advancements, and build a strong portfolio showcasing your expertise in optimizing AI model performance through prompt engineering.